I am quite unsure about how this will work on touchscreen devices. For example, if I drag something with one finger on a phone and then place another finger on the screen, will the object suddenly jump to the other finger? That was the case with most features that involved moving objects with the mouse before this update.

@Testbot379 DragDetector policy is that only one player or finger may drag it at a time.

So once you touch a DragDetector with one finger, that finger ‘owns’ the drag until it lets go. The second finger will have no effect. (At some point we may add the ability for two players or fingers to drag at the same time, but if we do that it will be more like pushing a brick from both ends at once, without any jump. In the interim, you can get a similar effect by welding two separate handles-with-dragdetectors to the same object, allowing two players to move it together)
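As a rough illustration of the two-handle workaround mentioned above, here is a minimal sketch. The part name `SharedObject`, the handle offsets, and the `addHandle` helper are hypothetical, and it assumes the shared object is unanchored so that both drags act on the same assembly.

```lua
-- Sketch only: "SharedObject" is a hypothetical, unanchored part in workspace.
local body = workspace:WaitForChild("SharedObject") :: BasePart

-- Hypothetical helper: creates a small handle welded to the body,
-- with its own DragDetector so a separate player or finger can grab it.
local function addHandle(offset: CFrame): Part
	local handle = Instance.new("Part")
	handle.Name = "Handle"
	handle.Size = Vector3.new(1, 1, 1)
	handle.CFrame = body.CFrame * offset -- position the handle before welding

	local weld = Instance.new("WeldConstraint")
	weld.Part0 = handle
	weld.Part1 = body
	weld.Parent = handle

	local detector = Instance.new("DragDetector")
	detector.Parent = handle

	handle.Parent = workspace
	return handle
end

-- One handle at each end of the object (offsets are illustrative).
addHandle(CFrame.new(-3, 0, 0))
addHandle(CFrame.new(3, 0, 0))
```

Each handle has its own DragDetector, so the one-drag-per-detector policy still holds, while both handles pull on the same welded object.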

Note that you CAN move multiple objects with individual fingers simultaneously, so long as they contain separate DragDetectors.

Hello again @LadyAka,

We were able to reproduce your bug. What I failed to grasp was that after enable/disable, the first player could still drag the object, but OTHER players could not! @DeFactoCepti helped me understand.

He has checked in a fix for the issue, and if all goes well, the fix should be live late next week.

Thanks so much for reporting this!

Thank you. Sorry for checking late, I was really busy this week. I was about to make a repro file but I’m glad you were able to figure it out!

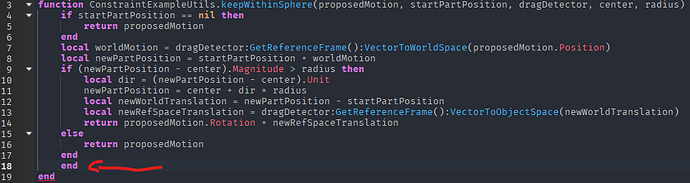

Little info about a typo you made in one of the scripts on DragDetectors TestWorld 2 - Roblox

Script: workspace.ConstraintAndScriptableDragStyleExamples.ConstraintExampleUtils

Line: 18

Reason: Too many end.

Could someone help me in my case?

EDIT: problem solved!

I have this current setup:

Setup in workspace


DragDetector properties

LocalScript parented to StarterPlayerScripts

    local Detector: DragDetector = workspace:WaitForChild("TestPartDrag"):WaitForChild("DragDetector") :: DragDetector

    Detector:AddConstraintFunction(1000, function(ProposedMotion: CFrame): CFrame
        warn((CFrame.new(0, 0, 0) * ProposedMotion.Rotation).Position)
        return CFrame.new(0, 0, 0) * ProposedMotion.Rotation
    end)

    Detector:GetPropertyChangedSignal("DragFrame"):Connect(function(): ()
        print(Detector.DragFrame.Position)
    end)

Result

Why does my DragFrame have a position, whereas I only send the ProposedMotion.Rotation (with a blank position in another CFrame)?

Repro place: repro.rbxl (54.9 KB)

I noticed that the higher the part is placed, the “higher” it’ll spin around.

I may have some misconceptions in CFrame understanding, but I think I am correct in this example still

This is what made me think my logic is right by the way.

@PrinceTybalt I’ve messaged you and sent a friend request so you can have a look at my place.

Thanks for letting me know. It’s all fixed now.

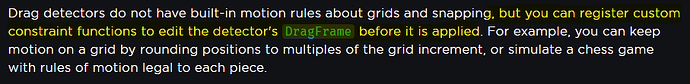

@Varonex_0 it will take me a little while to work up a full answer with examples, but the crux of the issue is that the frames passed in and out of the constraint function are motion expressed in worldspace, not reference space.

Since the object is away from the origin, a rotation matrix with no translation will orbit the object around the origin of the workspace. The extra translation is to put it back at the referenceInstance origin.
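To make the orbiting effect concrete, here is a small sketch using plain CFrame math that can be pasted into the Studio command bar. It is not the DragDetector implementation; the pivot position and the 90 degree angle are made-up values, and it only illustrates why a motion that rotates a point in place must carry a non-zero translation when the point is away from the origin.

```lua
-- Hypothetical values for illustration only.
local pivot = Vector3.new(0, 10, 0)                   -- where the dragged part sits
local pureRotation = CFrame.Angles(0, 0, math.rad(90)) -- rotation with zero translation

-- Applying the pure rotation in world space moves the point:
-- it orbits around the world origin instead of spinning in place.
print("Pure rotation moves the pivot to:", pureRotation * pivot)

-- Rotating about the pivot means: move the pivot to the origin, rotate, move back.
local aboutPivot = CFrame.new(pivot) * pureRotation * CFrame.new(-pivot)

-- The pivot itself now stays where it was.
print("Rotation about the pivot keeps it at:", aboutPivot * pivot)

-- The combined motion has a non-zero Position, and it grows with the
-- pivot's distance from the origin.
print("Translation carried by that motion:", aboutPivot.Position)
```

This matches the observation that a part placed higher up swings through a larger arc when the translation is zeroed out.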

I will work up a proper example for how to, say, clamp the rotations in increments of 10 degrees and post it. It’s going to involve [a] setting the referenceInstance of the dragDetector (otherwise that frame is based on the part, and it’s going to rotate every time you finish a new drag), [b] casting the incoming proposed motion to reference space, [c] clamping the motion, and [d] casting the result back out into worldspace.

Gosh this sounds messy. Sounds like we should have an option for registering a constraint function that operates relative to the referenceFrame.

I will test this out.

I am not a huge friend of vector spaces at all, which is why your explanation still looks a bit hard to understand.

I will still work my way through it!

What I understood from the ProposedMotion you are receiving is that:

- ProposedMotion.Position is the translation of the startCFrame.Position to the final theoretical position (let’s call it endCFrame.Position)
- ProposedMotion.Rotation is the new rotation of the PVInstance you are moving.

That is why I was sending CFrame.new(0, 0, 0), because I did not want the DragFrame to receive any translation at all. I visualized it as an offset between the startCFrame.Position and the theoretical endCFrame.Position. That’s what I saw from my tests.

Am I correct in my analogy? If not, please correct me. If yes, then why does the DragFrame still get a position when I send a CFrame with Vector3.zero as the translation, plus the rotation?

I may have some huge misconceptions on CFrames, despite the fact I am completely working my way out in what I’m doing.

EDIT:

Made a test:

    local Part: Part = workspace:WaitForChild("TestPartDrag")
    local Detector: DragDetector = Part:WaitForChild("DragDetector") :: DragDetector

    Detector:AddConstraintFunction(1000, function(ProposedMotion: CFrame): CFrame
        warn("ProposedMotion:\t", ProposedMotion.Position, "\r")
        print("Custom:\t", ((CFrame.new(0, 0, 0)) * ProposedMotion.Rotation).Position, "\r")
        return ProposedMotion
    end)

Indeed the ProposedMotion.Position isn’t blank. I cannot figure out why. Anyway, I’ll stick to the normal ProposedMotion since my Drag is only for orientation purposes.

Your intuitions are correct. If you don’t want it to move then a delta position of (0,0,0) is the natural action to take.

The problem is less on your end and more in the way we are expressing the matrices we are giving and receiving from you; and the order we apply the rotation and translation that are bound into that matrix. I need to decide whether we change some behavior; or add new API. And I won’t be certain until I spend a little time on the problem.

In the interim, I would avoid working too much with constraints on rotations and stick with constraining translation drag styles, which should work nicely.

Was there ever a Lua implementation of these in testing? I would like to mess with the code and see if I can make it do some cool stuff if possible!

@bigcrazycarboy no, the implementation is in C++.

And the hope was that the API hooks would let you customize things to accomplish what you want.

What are some of the things you’d like to try that are not supported by the DragDetector properties, events, and methods?

That would be lovely to have a function that simplifies all this. Up to you to integrate it.

Once again, thanks for the help!

I wanted to change it pretty fundamentally in order to see if I could bind its input to the motion of an object in 3d space to make a sort of “spring service” for the motion of objects outside of constraints, as well as possibly work with 2D UI or other things. It would be really cool to create a “goal” brick and use the maxforce to adapt the tendency of a different assembly to try to match that goal cframe. I guess that’s generally just the behavior of a gyro but perhaps if it were implemented in Lua it could be applied to camera coding and other things that a gyro isn’t suited for - not to mention, the level of control you could have over it.

Honestly I just saw this was a pretty complex system and I wanted to see if there were any interesting parts of the code I could rip out and use in other ways. Sometimes I know C++ features are developed with Lua prototypes so I just thought I’d ask. Thanks for being pretty active here and replying so quickly.

@bigcrazycarboy I’m not 100% clear on what you mean. But you could put a DragDetector under your anchored “goal” brick to move it where you want. And then have a spring with one attachment on your goal brick, and the other attachment on your assembly. Then you’d be pulling your assembly around by a spring; and you would adjust the spring parameters to get tighter/looser motion. If you look at the marionette example in the Test World 2 I do something similar with ropes instead of springs. The handles are anchored and movable. The rest of the marionette is connected and so you can tug it around by the handles.
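A minimal sketch of that goal-brick-and-spring setup might look like the following. The part names `GoalBrick` and `Follower` and the spring values are hypothetical; it assumes the goal brick is anchored and the follower is an unanchored assembly.

```lua
-- Sketch only: part names and spring parameters are illustrative assumptions.
local goal = workspace:WaitForChild("GoalBrick") :: BasePart     -- anchored
local follower = workspace:WaitForChild("Follower") :: BasePart  -- unanchored

-- The DragDetector lets the player move the anchored goal brick.
local detector = Instance.new("DragDetector")
detector.Parent = goal

-- One attachment on each part for the spring to connect.
local goalAttachment = Instance.new("Attachment")
goalAttachment.Parent = goal

local followerAttachment = Instance.new("Attachment")
followerAttachment.Parent = follower

-- The spring pulls the follower toward the goal brick.
local spring = Instance.new("SpringConstraint")
spring.Attachment0 = goalAttachment
spring.Attachment1 = followerAttachment
spring.FreeLength = 2   -- rest length, in studs
spring.Stiffness = 200  -- higher values give tighter following
spring.Damping = 20     -- higher values reduce oscillation
spring.Parent = goal
```

Raising `Stiffness` makes the follower track the goal more tightly, while raising `Damping` settles it faster.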

Is this the sort of thing you mean? If not, perhaps describe in more detail.

We tried to build a powerful and flexible API, so if there are things you can’t do, we’d like to understand why.

As for DragDetectors that work within 2D UI… it’s high on our list. We hope to make that a feature in the future.

Honestly it is really impressive, you guys did a great job with the API design and it feels extremely powerful and customizable to use - great job!

The only thing I could think to add to the existing API would be the ability to “emulate” drag input from a player. Since we can’t actually invoke a real “click” from the player (someone had to get creative to make the original version of the Xbox mouse before Roblox released it officially), there is no actual way to invoke these draggers from a LocalScript without programming the entire physics behavior of the draggers from scratch. It might actually be very useful to have a DragDetector that is totally disabled for use by a player, but instead receives its movement input from a script. This could be useful in cases where a developer wants to add controller support to the draggers. There isn’t a really good way for Roblox to generically add controller support because controllers don’t have a mouse, and other solutions may be intrusive. I did notice that if I hold down the right trigger it sends a “click”, but if a developer used a proximity prompt to enter a “drag editor” state, they could interpret the controller signals and send corresponding input to the DragDetector, making a much cleaner implementation.

@bigcrazycarboy Thanks for the kind words.

Regarding driving a DragDetector from scripts:

[1] If the parts affected by the DragDetector are anchored, you can edit the DragDetector’s DragFrame property and the object will move to match it. So this is one way you can drive the motion of the object with a script. This SHOULD work with non-anchored objects (using kinematic constraints in Studio and full physics constraints in game) but it does not; we consider this a bug to be fixed, relatively high on our list (but it won’t be fixed before the new year). This would allow you to move the object using physics via a script, and you could direct it exactly where you want to go.

[2] If you wanted to simulate the click-drag-release behavior via a script, how would you express the drag? Via calculating mouse events of a simulated mouse? That sounds hard, and we haven’t yet come up with a way to do this that would feel nice.

[3] So instead, if you wanted a proximity prompt to move a DragDetector, what if you had the key actions edit the DragFrame? For example, you could have a leftArrow button that edits the DragFrame, in increments per press, to move in the direction you consider ‘left’, and a rightArrow button to move it right. Or you could use four arrows to move in 4 directions within a plane. This is something you can try right now with anchored objects, and you’ll need to wait for [1] to do this for non-anchored ones. Attached is a little demo that moves an anchored part in this way, 1 unit in X, when you press ‘E’. Of course you could do this without DragDetectors by editing the CFrame/pivot, so this will all only really be new/different when we fix [1] above. But it gets across the concept.

ProximityPromptDrivingDragDetector.rbxl (56.5 KB)
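The contents of the attached place are not reproduced here, but a minimal sketch of the same idea could look like this. The part name `AnchoredPart` is hypothetical, and the script assumes the part is anchored and already contains a DragDetector and a ProximityPrompt (whose default keyboard key is E).

```lua
-- Sketch only: "AnchoredPart" is a hypothetical anchored part that already
-- has a DragDetector and a ProximityPrompt as children.
local part = workspace:WaitForChild("AnchoredPart") :: BasePart
local detector = part:WaitForChild("DragDetector") :: DragDetector
local prompt = part:WaitForChild("ProximityPrompt") :: ProximityPrompt

local STEP = Vector3.new(1, 0, 0) -- move 1 unit in X per trigger

prompt.Triggered:Connect(function(_player: Player)
	-- Editing DragFrame moves the anchored part to match it.
	detector.DragFrame = detector.DragFrame + STEP
end)
```

As noted in [1], this only works for anchored parts at the moment.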

Is it possible to make a function where, if you drag on a part, it gives you another part and automatically drags that part instead of the one you originally grabbed?