I found a workaround. GetPivot() can still retrieve the model’s PrimaryPart position even after it is already parented to nil.

workspace.ChildRemoved:Connect(function(model)

print(model:GetPivot().Position)

end)

Welp, time for a week of testing deferred mode. Surprisingly, I only had to modify a few lines of code to make it work.

Is there a plan to rework any of the Destroying or Removing events to better reflect deferred signaling?

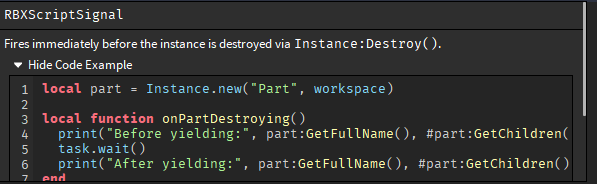

The purpose of these events was to access or listen to something right before it got destroyed or removed, but since any functions connected to these events are going to be executed at a deferred point in the current frame, when they finally get executed, the object will already have been destroyed or removed.

That would be useful for a .Removed or .Destroyed event, but not for a .Removing or .Destroying event whose purpose was to allow us to execute something before it gets destroyed/removed.
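One way to sidestep this, at least for instances that your own code destroys, is to stop relying on the signal and run the "before" logic synchronously just ahead of the Destroy call. Below is a minimal sketch of that idea. `destroyWithCleanup` is a hypothetical helper, not a Roblox API, and `fakeModel` is a plain table standing in for an Instance so the snippet runs outside Roblox. It does not help with engine-initiated removals such as a player leaving.

```lua
-- Hypothetical helper (not a Roblox API): run cleanup synchronously, then destroy.
local function destroyWithCleanup(instance, beforeDestroy)
	-- The callback runs while the instance is still intact,
	-- regardless of how signal handlers are scheduled.
	beforeDestroy(instance)
	instance:Destroy()
end

-- Stand-in object so the sketch runs outside Roblox; in a real game this
-- would be an Instance such as a Model.
local fakeModel = { Name = "Crate", Destroyed = false }
function fakeModel:Destroy()
	self.Destroyed = true
end

-- Record what the cleanup callback observed.
local stateSeenByCleanup
destroyWithCleanup(fakeModel, function(model)
	stateSeenByCleanup = model.Destroyed
end)
```

The callback sees the object before it is destroyed because nothing is queued: the ordering comes from plain function calls rather than from event scheduling.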

> You should keep in mind that the majority of these affect <10%, sometimes even <1% of experiences in the first place

Where did you get this percentage from?

Also, 10% of experiences is a lot: if there are around 5.5 million experiences, that means it affects around 550,000 of them. I agree with @ChipioIndustries. Eventually I’m gonna move on to something else, but it’s likely that my games are gonna break within a few years because of updates like these.

P.S. I am not against this update; in fact, it’s quite the opposite: I support it. I just have some concerns, such as it being forced… unless I’m getting the wrong idea. If I am, please correct me.

I think the main problem is that Destroying is supposed to run BEFORE the part is destroyed.

I don’t know if this is a bug or whether I did something wrong, but when I tested it, the Instance.Destroying signal didn’t fire at all in a client-side script.

I had to use the .Changed signal instead to detect when an object is parented to nil, and it felt weird to do it that way.

It kinda is how we used to write clean-up scripts, but it’s weird that .Destroying doesn’t fire when an object is destroyed.

Hey, everyone, I’ve been trying to convert my game to use deferred engine events since it is becoming the new standard, but I have had some issues. Specifically, issues with setting variables in scripts, or at least that seems to be the recurring one. Let me show you an example:

![]()

![]()

@WallsAreForClimbing @tnavarts

Have the events that are connected to instance destroying been fixed? If I recall this update broke that, or so I’ve been told.

Also, even if this becomes the new standard, are we still going to have it as an optional flag? I’m much more comfortable with immediate mode, as I’m not aware of any actual improvements that deferred mode brings besides changing how events are scheduled.

Destroying works, but if deferred events get rolled out, the naming does not make sense anymore.

.Destroying → .Destroyed

.CharacterRemoving → .CharacterRemoved

.PlayerRemoving → .PlayerRemoved

@WallsAreForClimbing @tnavarts What would happen to these names? They’re now misleading: the part is already destroyed, but the name indicates a present-tense event.

Yes, it’s likely we’ll need to rename these events and provide additional data about the previous state.

If these events are renamed, will there be new methods that allow developers to take action on instances / players / characters that are about to be destroyed before they are? There are a lot of important use cases that depend on being able to intercept instances prior to deletion. Without this, switching to deferred signaling would be much more difficult.

Would you be able to enumerate some of those cases in a bit more detail? I’m interested in where this crops up the most to understand if we need general purpose APIs or if something more specific for certain use-cases would make sense.

Hey @WallsAreForClimbing, thanks for your patience while I’ve been out of town.

Here are some examples:

There are many other cases where immediate mode signaling is very helpful beyond the Destroying event. For example, in my game, I’m trying to run some logic right before property changes are replicated from server to client. I call PropertyChangeAlertRemoteEvent:FireClient() on the server right before setting part.BrickColor = BrickColor.new("Really red") and 17 other property changes on the server, and I want to be able to listen to PropertyChangeAlertRemoteEvent.OnClientEvent on the client and immediately run client logic right before the property changes stream in from server to client as part of ReplicationReceiveJobs. Unfortunately, PropertyChangeAlertRemoteEvent fires too late due to deferred event signaling.

Thus I would strongly advocate for a general purpose solution for breaking out of deferred signaling when there are specific necessary use cases. Right now there are specific behaviors that are possible with the legacy immediate mode signaling that aren’t possible with deferred signaling. I would love it if Roblox could preserve those behaviors, since they are powerful and enable complex state management that is critical for making highly technical games like our game Adopt Me.

Hello!

It’s about my plugin.

If I do

workspace.SignalBehavior = Deferred

that button click event is working

If I do

workspace.SignalBehavior = Immediate

that button click event is not working

Moreover, the rest of the buttons in the plugin work, but these are the ones that are not shown in the video.

It’s about this button event:

DropValueTBClone.MouseButton1Down:Connect(function()

Richtextbox.Text = BtnName

print(BtnName)

end)

This happens only for the plugin.

It has been some time since deferred events were rolled out, and now I really hope that either immediate signal behaviour will NEVER be phased out, or that deferred signal behaviour is straight up scrapped.

I have run into issues caused by deferred events too many times, in two of my modules.

I encountered another issue just today, and it is really annoying me. Here is a recreation of the issue (the issue was with UI, rather than a Part, but it’s the same thing):

local Lock = false

game.Workspace.Part:GetPropertyChangedSignal("CFrame"):Connect(function()

print("CHANGED", Lock)

if Lock then return end -- Ignore the change, if it was done by the script

end)

Lock = true

game.Workspace.Part.CFrame *= CFrame.new(0,1,0)

Lock = false

What this code does is prevent the Changed handler from reacting when the script itself changes the property. This is useful when you are listening for that change but want to avoid your own script triggering it again.

Deferred signal behaviour has caused some of my code to straight up freeze Studio (and probably live games that use it), because, with deferred signals, the connected handler runs later on, after Lock has already been set back to false, which can cause infinite recursion (the property change causes yet another change to the property, and so on forever).

This kind of “Lock Variable” is something I have used, not commonly, but still many times, and I am afraid of more things breaking if they switch to deferred

I’ll probably defer changing the Lock variable to false, or store the changed value of the object, and if the value of the object is the same as that value, it will return. These solutions annoy me though, I’m not a fan of them

(I did change it so I defer changing the variable to false, but I need to defer it twice for some reason? This is so scuffed…)

![]()
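To make the lock problem concrete, here is a minimal sketch in plain Lua that imitates deferred scheduling with a simple queue. Everything in it is a stand-in and an assumption on my part: `fireDeferred` and `flush` are hypothetical and only mimic "queue now, run later", and `part` is an ordinary table rather than a real Part. The first half shows the handler observing the lock after it was released. The second half shows the "store the value the script wrote" alternative mentioned above.

```lua
-- Hypothetical stand-in for deferred signal dispatch (not the Roblox engine).
local queue = {}

local function fireDeferred(handler)
	-- Deferred mode: remember the handler and run it later.
	table.insert(queue, handler)
end

local function flush()
	-- Run queued handlers in order, including ones queued while flushing.
	local i = 1
	while i <= #queue do
		queue[i]()
		i = i + 1
	end
	queue = {}
end

local part = { Value = 0 } -- plain table standing in for the observed object

-- 1) Lock variable: the handler runs after the lock was already released.
local lock = false
local lockSeenByHandler = {}
local function onChangedWithLock()
	table.insert(lockSeenByHandler, lock)
end

lock = true
part.Value = 1
fireDeferred(onChangedWithLock) -- the change is signalled, but only queued
lock = false
flush() -- handler finally runs and sees lock == false

-- 2) Remember what the script wrote and compare inside the handler.
local lastScriptValue = nil
local externalChanges = 0
local function onChangedWithMemo()
	if part.Value == lastScriptValue then
		return -- this change came from the script itself, so ignore it
	end
	externalChanges = externalChanges + 1
end

lastScriptValue = 2
part.Value = 2 -- change made by the script
fireDeferred(onChangedWithMemo)
flush() -- ignored

part.Value = 3 -- change made by something else
fireDeferred(onChangedWithMemo)
flush() -- counted
```

The comparison approach does not depend on when the handler runs, which is why it survives deferral. Its trade-off is that an outside change to exactly the value the script last wrote is also ignored.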

Here is another example of deferred signals breaking my code: Parallel Scheduler - Parallel lua made easy and performant - #14 by Tomi1231

In this case, deferred signal behaviour seemed to defer the initialization of scripts, changing the order of events and breaking my module. The fix was easy, but it took me some time to figure it out, and I shouldn’t have to defer the firing of my bindable event…

Please PLEASE reconsider this change. It will break so many games, and I don’t want to see yet more old games that don’t work or straight up freeze.

I wonder how many of the potential breakage people are mentioning could be due to mixing Signal behavior, or old versions of libraries that haven’t been adapted for it. I previously had designed my Signal library so that goes out of the occasion. GoodSignal is Immediate mode only, and sleitnick’s fork does not adapt to it but rather just gives you an extra function to use which discourages its use IMO, and means devs have to go out of their way to test this which is hard.

It seems really weird to me that people would be relying that much on this kind of behavior from Signals tbh.

Small update (and in case anyone comes here from the front page of Google): we’ve put out some documentation for deferred events here! Let us know if there’s any additional information about deferred events that you’d like to see on that page, or elsewhere in the docs.

Hi! I’ve run into a bottleneck with updating tons of UI layouts, and notably the most expensive part is the ‘Post-Layout Event Dispatch’ step. I would expect that Deferred mode would have helped with performance greatly here; however, I see no difference. In fact, there may be an infinitesimally small increase in the UpdateUILayouts step, as it increases from ~10.3ms to ~10.4ms. This can of course be chalked up to noise, so it’s not as important, but the lack of performance gains is a bit saddening.

Here’s a .rbxl file containing the place I used to reproduce this:

gui_testing.rbxl (67.2 KB)

To repro, just press play and look at the GUI objects that are being resized.

The processing being done in the Post-Layout Event Dispatch step is engine callbacks, not Luau callbacks, so unfortunately Deferred mode won’t net you any performance improvements in that place.
