Hey @panzerv1, we’ve been working on a new Instance type that incorporates a lot of the feedback you gave on the equalizer; keep an eye out for an announcement!

I’m not sure if this question has been asked already since there are a ton of replies, but why is the Volume property of AudioPlayer and AudioFader capped at 3? This is really limiting and feels extremely arbitrary. @ReallyLongArms

oh god that does not sound right

I tried connecting GetPropertyChangedSignal to the RmsLevel and PeakLevel properties of an AudioAnalyzer, but it doesn't fire anything.

The level changes, but nothing is printed to the console.

This runs on the client.

It would be great if there were an event specifically for tracking changes, instead of using a loop to check constantly.

why is the Volume property of AudioPlayer and AudioFader capped at 3? This is really limiting and feels extremely arbitrary.

@Rocky28447 it is a little bit arbitrary, but since the Volume property is in units of amplitude, making something 3x louder can have an outsized impact. With the Sound/SoundGroup Volume property going all the way up to 10x (and VideoFrame going up to 100x), it was/is pretty easy to accidentally blow your ears out.

If you want to go beyond 3x, you can chain AudioFaders to boost the signal further – but if that is too inconvenient we can revisit the limits.
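A minimal sketch of the chaining approach, assuming the chain should end at an AudioDeviceOutput. Each fader's Volume stays within the cap, but the gains multiply, so faders at 3 and 2 give 6x overall. `wireUp` and `boostChain` are hypothetical helper names, not part of the engine.

```lua
-- Hypothetical helper: connect source -> target with a Wire parented to the target.
local function wireUp(source, target)
	local wire = Instance.new("Wire")
	wire.SourceInstance = source
	wire.TargetInstance = target
	wire.Parent = target
	return wire
end

-- Hypothetical helper: insert one AudioFader per entry in `volumes` between
-- `source` and `destination`. Each Volume stays within the 3x cap, but the
-- faders' gains multiply, so {3, 3} yields 9x overall.
local function boostChain(source, destination, volumes, parent)
	local previous = source
	local faders = {}
	for i, volume in ipairs(volumes) do
		local fader = Instance.new("AudioFader")
		fader.Name = "Boost" .. i
		fader.Volume = volume
		fader.Parent = parent
		wireUp(previous, fader)
		faders[i] = fader
		previous = fader
	end
	wireUp(previous, destination)
	return faders
end
```

For example, `boostChain(audioPlayer, deviceOutput, {3, 3}, audioPlayer)` would place two 3x faders between the player and the output.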

It would be great if there were an event specifically for tracking changes, instead of using a loop to check constantly.

Hey @lnguyenhoang42, PeakLevel and RmsLevel get updated on a separate audio mixing thread, and we didn't want to imply that scripts would be able to observe every change, since they happen too quickly. Studio also uses property change signals to determine when it should re-draw various UI components, and frequently-firing properties can bog down the editor.
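Since these properties don't fire change signals, one option is to read them once per frame on the client and only react when the value moves noticeably. This is a minimal sketch; `makeLevelWatcher` and the threshold value are hypothetical choices, not engine APIs.

```lua
-- Hypothetical helper: returns a function to call once per frame (e.g. from
-- RunService.Heartbeat). It reads the analyzer's RmsLevel and only invokes
-- `onChanged` when the level moved by at least `threshold` since the last report.
local function makeLevelWatcher(analyzer, threshold, onChanged)
	local lastReported = nil
	return function()
		local level = analyzer.RmsLevel
		if lastReported == nil or math.abs(level - lastReported) >= threshold then
			lastReported = level
			onChanged(level)
		end
	end
end
```

In a LocalScript this could be hooked up as `game:GetService("RunService").Heartbeat:Connect(makeLevelWatcher(analyzer, 0.01, print))`; the threshold keeps small fluctuations from spamming the callback.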

Please add more effects, like noise removal.

I have a question, will it soon be possible to apply audio effects similarly to how we applied sound effects?

I’m making a voice chat system where if someone is behind a wall, their voice will get muffled. While rewiring one effect isn’t much of a hassle, the connections might start looking like spaghetti once more effects get added.

Instead of putting everything in a linear order, will it soon be possible to have a standalone effect and connect it to an emitter via a wire?

Here’s an image of what I mean:

Yeah, chaining three AudioFader instances really isn’t ideal for recreating the old behavior.

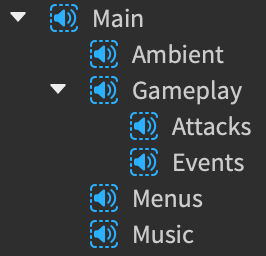

Also, I’d like to request an additional audio instance be added that allows for adjusting the volume of many AudioPlayer instances simultaneously. The use case for this is to recreate the behavior of SoundGroup instances. Multiple Sound instances can be assigned to a single SoundGroup and this allows for easy implementation of things like in-game audio settings for different types of audio, since the single SoundGroup can be used to adjust all Sound instances assigned to it.

Currently, AudioFader instances cannot serve this use case, as piping multiple AudioPlayer instances through a single AudioFader will stack them all on top of each other. We would need some instance that can output into an AudioPlayer and control its output volume (or just add this behavior to AudioFader).

My game has precise audio controls, which include a “Master” control as well as controls for individual audio types, such as ambient FX, ambient music, UI sounds, and more. This was very easy to set up with the old system: I just created one SoundGroup called “Master”, created the other categories underneath it, and then assigned Sound instances to their respective SoundGroup. Under the new system, recreating this behavior is a massive pain.

chaining three AudioFader instances really isn’t ideal for recreating the old behavior

Since .Volume is multiplicative, if you set AudioPlayer.Volume to 3, you’d only need one AudioFader with .Volume = 3 to boost the asset’s volume 9x.
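Because each Volume is an amplitude multiplier, gains along a chain multiply. Converting with the standard amplitude-to-decibel formula $20\log_{10}(g)$ shows what these multipliers mean in loudness terms:

$$
g_{\text{total}} = V_{\text{AudioPlayer}} \cdot V_{\text{AudioFader}} = 3 \cdot 3 = 9
$$

$$
20\log_{10} 3 \approx 9.5\ \text{dB}, \qquad 20\log_{10} 9 \approx 19.1\ \text{dB}, \qquad 20\log_{10} 10 = 20\ \text{dB}
$$

So each capped stage adds at most about 9.5 dB, two stages reach about 19.1 dB, and the old 10x Sound limit corresponds to 20 dB.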

I wonder if it would be more useful to have something like an AudioPlayer.Normalize property – is the desire for a 10x volume boost coming from quiet source-material?

Currently, AudioFader instances cannot serve this use case, as piping multiple AudioPlayer instances through a single AudioFader will stack them all on top of each other

SoundGroups are actually AudioFaders under the hood – so it should be possible to recreate their exact behavior in the new api. The main difference is that Sounds and SoundGroups “hide” the Wires that the audio engine is creating internally, whereas the new API has them out in the open.

When you set the Sound.SoundGroup property, it’s exactly like wiring an AudioPlayer to a (potentially-shared) AudioFader.

I.e. this

is equivalent to

Similarly, when parenting one SoundGroup to another, it’s exactly like wiring one AudioFader to another.

I.e. this

is equivalent to
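A minimal sketch of rebuilding a SoundGroup hierarchy (a “Master” group with per-category groups underneath) from these equivalences, assuming the mix ends at an AudioDeviceOutput. `wireUp`, `buildMixer`, and `assignToGroup` are hypothetical helper names.

```lua
-- Hypothetical helper: connect source -> target with a Wire parented to the target.
local function wireUp(source, target)
	local wire = Instance.new("Wire")
	wire.SourceInstance = source
	wire.TargetInstance = target
	wire.Parent = target
	return wire
end

-- Builds a Master fader feeding the speakers, plus one child fader per category,
-- mirroring a "Master" SoundGroup with child SoundGroups parented under it.
local function buildMixer(categoryNames, parent)
	local output = Instance.new("AudioDeviceOutput")
	output.Parent = parent

	local master = Instance.new("AudioFader")
	master.Name = "Master"
	master.Parent = parent
	wireUp(master, output)

	local groups = {}
	for _, name in ipairs(categoryNames) do
		local group = Instance.new("AudioFader")
		group.Name = name
		group.Parent = master
		wireUp(group, master) -- like parenting a SoundGroup under Master
		groups[name] = group
	end
	return master, groups
end

-- Like setting Sound.SoundGroup: wire the player into a (possibly shared) group fader.
local function assignToGroup(audioPlayer, group)
	return wireUp(audioPlayer, group)
end
```

With `local master, groups = buildMixer({"Music", "UI"}, game:GetService("SoundService"))`, setting `groups.Music.Volume` scales every player assigned to Music, and `master.Volume` scales everything.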

If there are effects parented to a Sound or a SoundGroup, those get applied in sequence before going to the destination, which is exactly like wiring each effect to the next.

Things get more complicated if a Sound is parented to a Part or an Attachment, since this makes the sound 3d – internally, it’s performing the role of an AudioPlayer, an AudioEmitter, and an AudioListener all in one.

So this

is equivalent to

where the Emitter and the Listener have the same AudioInteractionGroup.

And if the 3d Sound is being sent to a SoundGroup, then this

is equivalent to
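A minimal sketch of the 3D case, assuming one AudioListener per group (parented to the camera) that feeds that group's fader, and `groupFader` is any AudioFader already wired toward an AudioDeviceOutput. The helper names are hypothetical.

```lua
-- Hypothetical helper: connect source -> target with a Wire parented to the target.
local function wireUp(source, target)
	local wire = Instance.new("Wire")
	wire.SourceInstance = source
	wire.TargetInstance = target
	wire.Parent = target
	return wire
end

-- One listener per group: it only hears emitters whose AudioInteractionGroup
-- matches, and it feeds that group's fader.
local function makeGroupListener(camera, groupFader, groupName)
	local listener = Instance.new("AudioListener")
	listener.Name = groupName .. "Listener"
	listener.AudioInteractionGroup = groupName
	listener.Parent = camera
	wireUp(listener, groupFader)
	return listener
end

-- Plays audio "from" a part, like a Sound parented to a BasePart or Attachment.
local function make3dPlayer(part, groupName)
	local player = Instance.new("AudioPlayer")
	player.Parent = part

	local emitter = Instance.new("AudioEmitter")
	emitter.AudioInteractionGroup = groupName
	emitter.Parent = part
	wireUp(player, emitter)
	return player, emitter
end
```

After creating the listener once per group, each `make3dPlayer(somePart, "SFX")` only needs its Asset set and `:Play()` called; the shared interaction group routes it through the right fader.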

Exposing wires comes with a lot of complexity, but it enables more flexible audio mix graphs, which don’t need to be tree-shaped. I recognize that the spaghetti can get pretty rough to juggle – I used SoundGroups extensively in past projects, and made a plugin with keyboard shortcuts to convert them to the new instances, which is how I’ve been generating the above screenshots. The code isn’t very clean, but I can try to polish it up and post it if that would be helpful!

Hey @MonkeyIncorporated – we recently added a .Bypass property to all the effect instances.

When an effect is bypassed, audio streams will flow through it unaltered; so I think you could use an AudioFilter or an AudioEqualizer to muffle voices, and set it to .Bypass = true when there’s no obstructing wall. We have a similar code snippet in the AudioFilter documentation, but we used the .Frequency property instead of .Bypass – I think either would work in this case.

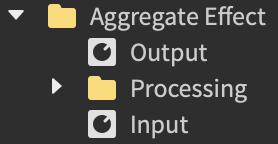
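A minimal sketch of the .Bypass approach, assuming `filter` is an AudioFilter already configured to muffle and wired into one speaker's voice path (e.g. between their AudioDeviceInput and AudioEmitter), and that both world positions are known. The exclusion list is an assumption too: it should hold both characters so they don't count as a wall. `updateMuffle` is a hypothetical name.

```lua
-- Hypothetical helper: raycast from listener to speaker; if anything is in the
-- way, leave the filter active (muffled), otherwise bypass it (clear audio).
local function updateMuffle(filter, listenerPos, emitterPos, ignoreList)
	local params = RaycastParams.new()
	params.FilterType = Enum.RaycastFilterType.Exclude
	params.FilterDescendantsInstances = ignoreList
	local hit = workspace:Raycast(listenerPos, emitterPos - listenerPos, params)
	-- Nothing in the way: let audio pass through unaltered.
	filter.Bypass = (hit == nil)
	return hit ~= nil
end
```

Calling this on a short timer rather than every frame is usually enough, since walls rarely move between frames.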

Instead of putting everything in a linear order, will it soon be possible to have a standalone effect and connect it to an emitter via a wire?

A trick that I’ve used is having two AudioFaders act as the Input/Output of a more complicated processing graph that’s encapsulated somewhere else.

Here, the inner Processing folder might contain a variety of effects already wired up to the Input/Output. Then anything wanting to use the whole bundle of effects only has to connect to the Input/Output fader(s).
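A minimal sketch of that Input/Output pattern, assuming the bundle lives in a Folder with Input and Output faders and the effects in a Processing subfolder wired in order. `makeEffectBundle` and `wireUp` are hypothetical names.

```lua
-- Hypothetical helper: connect source -> target with a Wire parented to the target.
local function wireUp(source, target)
	local wire = Instance.new("Wire")
	wire.SourceInstance = source
	wire.TargetInstance = target
	wire.Parent = target
	return wire
end

-- Builds Input fader -> effects (in order) -> Output fader inside one folder.
-- Callers only wire into Input and out of Output.
local function makeEffectBundle(name, effectClassNames, parent)
	local folder = Instance.new("Folder")
	folder.Name = name

	local input = Instance.new("AudioFader")
	input.Name = "Input"
	input.Parent = folder

	local output = Instance.new("AudioFader")
	output.Name = "Output"
	output.Parent = folder

	local processing = Instance.new("Folder")
	processing.Name = "Processing"
	processing.Parent = folder

	local previous = input
	for _, className in ipairs(effectClassNames) do
		local effect = Instance.new(className)
		effect.Parent = processing
		wireUp(previous, effect)
		previous = effect
	end
	wireUp(previous, output)

	folder.Parent = parent
	return input, output
end
```

For the standalone-effect case, something like `local input, output = makeEffectBundle("Muffle", {"AudioFilter", "AudioReverb"}, workspace)` followed by wiring the AudioPlayer into `input` and `output` into the AudioEmitter keeps the emitter's own wiring to a single connection.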

Does this help?

Ah, I suppose that’s a bit better.

More so it’s just coming from a desire to not need an AudioFader where one wasn’t needed previously. Every additional audio instance in the chain requires setting up wires, so minimizing the need for extra instances where possible is ideal.

This image helps a lot. I was trying to apply the group fader before the AudioPlayer in the chain, which isn’t allowed. I didn’t think about applying the group fader between the listener and the output and creating a listener for each group using AudioInteractionGroup, but this makes a lot more sense. Thanks for the tip!

This works well for what I’m doing, thanks for sharing!

Is there any way to get the position of the source that the AudioListener is listening to?

AudioEmitters and AudioListeners inherit their position & rotation from their parent, so you can write a helper method like:

```lua
-- Returns the world-space CFrame that an AudioEmitter or AudioListener inherits
-- from its parent, or nil if the parent doesn't have a position.
local function getCFrameFrom(inst: Instance): CFrame?
	local parent = inst.Parent
	if not parent then
		return nil
	elseif parent:IsA("Model") then
		-- GetPivot() also accounts for a PrimaryPart, which WorldPivot ignores
		return parent:GetPivot()
	elseif parent:IsA("BasePart") then
		return parent.CFrame
	elseif parent:IsA("Attachment") then
		return parent.WorldCFrame
	elseif parent:IsA("Camera") then
		return parent.CFrame
	else
		return nil
	end
end
```

Just to confirm - is it not possible to read the RmsLevel property of an AudioAnalyzer on the server side? It seems to read 0 both in the demo place and in my own testing.

My use case is getting a player’s microphone input volume - right now I’m using an UnreliableRemoteEvent to share this data with the server, but I presume the latency for reading this on the server might be lower if this information is already sent with the voice input (unless it isn’t, I’m just guessing).

Let me know your thoughts

Just to confirm - is it not possible to read the RmsLevel property of an AudioAnalyzer on the server side? It seems to read 0 both in the demo place and in my own testing.

Since nobody can hear audio on the server, all audio processing is disabled there to conserve CPU and memory – this is also why AudioAnalyzer only works client-side.

right now I’m using an UnreliableRemoteEvent to share this data with the server

That sounds like a great solution!

I presume the latency for reading this on the server might be lower if this information is already sent with the voice input

Honestly, it might be a wash – your own voice (i.e. AudioDeviceInput.Player == LocalPlayer) is ahead of the server and/or any other clients. So if you have each client send only their own RmsLevel to the server, it might arrive at around the same time as the actual voice packets.
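A minimal sketch of that setup: each client throttles and sends only its own RmsLevel through an UnreliableRemoteEvent, and the server keeps the latest value per player. It assumes a hypothetical UnreliableRemoteEvent named `MicLevel` in ReplicatedStorage; `makeRmsSender` would go in a LocalScript and `onLevelReceived` in a server Script (they're shown together for brevity).

```lua
-- Client side (LocalScript): returns a per-frame callback that sends this
-- client's own RmsLevel at most once every `interval` seconds.
local function makeRmsSender(analyzer, remote, interval)
	local elapsed = 0
	return function(deltaTime)
		elapsed = elapsed + deltaTime
		if elapsed >= interval then
			elapsed = 0
			remote:FireServer(analyzer.RmsLevel)
		end
	end
end

-- Server side (Script): keep the latest reported level per player.
-- Client data is untrusted, so ignore anything that isn't a finite, non-negative number.
local latestLevel = {}
local function onLevelReceived(player, level)
	if type(level) ~= "number" or level ~= level or level < 0 or level == math.huge then
		return
	end
	latestLevel[player] = level
end
```

Hooked up, that would be `RunService.Heartbeat:Connect(makeRmsSender(analyzer, ReplicatedStorage.MicLevel, 0.1))` on the client and `ReplicatedStorage.MicLevel.OnServerEvent:Connect(onLevelReceived)` on the server, clearing `latestLevel[player]` on `Players.PlayerRemoving`.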

Ah, alright. Thank you for the insightful reply!

Is there any way to directly connect player audio input into an audio analyzer instead of using an audio listener?

Yes; AudioDeviceInput can be wired directly to an AudioAnalyzer without going through an intermediate AudioEmitter & AudioListener – emitters and listeners are only necessary if you want it to be part of the 3d world.

E.g.:

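```lua
-- LocalScript: AudioAnalyzer only works on the client.

-- Looks for the AudioDeviceInput under the Player; returns nil if there isn't one (yet).
local function findDevice(forPlayer: Player): AudioDeviceInput?
	return forPlayer:FindFirstChildWhichIsA("AudioDeviceInput")
end

-- Connects source -> target with a Wire parented to the target.
local function wireUp(source: Instance, target: Instance): Wire
	local wire = Instance.new("Wire")
	wire.SourceInstance = source
	wire.TargetInstance = target
	wire.Parent = target
	return wire
end

local device = findDevice(game:GetService("Players").LocalPlayer)
if device then
	-- Analyze the microphone stream directly; no emitter/listener needed.
	local analyzer = Instance.new("AudioAnalyzer")
	analyzer.Parent = device
	wireUp(device, analyzer)
end
```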

This would only get the position of the AudioListener’s parent, correct? Is it possible to get the position where the AudioListener picked up the sound?

For example, let’s assume every sound source, including the player, has an emitter with the same AudioInteractionGroup. If an AudioEmitter parented to a part emits sound and the player emits sound, would I be able to get the positions the audio played from? In this case, the part’s position as well as the player’s position.

GetConnectedWires seems to be a way to get a source instance, but that doesn’t work if my AudioListener listens through an AudioInteractionGroup. There doesn’t seem to be a way to retrieve where the sound is played from unless I wire it manually.
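The thread doesn't show a built-in way to ask a listener which emitters it heard. One possible workaround, sketched here under the assumption that the relevant emitters live under a searchable root such as workspace, is to collect every AudioEmitter sharing the listener's AudioInteractionGroup and read the position each inherits from its parent (the same parent-based idea as the helper earlier in the thread). This lists candidate sources, not which one is currently audible. Both function names are hypothetical.

```lua
-- Hypothetical helper: world position inherited from the instance's parent.
local function getPositionOf(inst)
	local parent = inst.Parent
	if not parent then return nil end
	if parent:IsA("Attachment") then return parent.WorldPosition end
	if parent:IsA("BasePart") then return parent.Position end
	if parent:IsA("Model") then return parent:GetPivot().Position end
	if parent:IsA("Camera") then return parent.CFrame.Position end
	return nil
end

-- Hypothetical workaround: every AudioEmitter under `root` that shares the
-- listener's AudioInteractionGroup, paired with its current position.
local function findEmitterPositions(listener, root)
	local results = {}
	for _, inst in ipairs(root:GetDescendants()) do
		if inst:IsA("AudioEmitter")
			and inst.AudioInteractionGroup == listener.AudioInteractionGroup then
			local pos = getPositionOf(inst)
			if pos then
				table.insert(results, { emitter = inst, position = pos })
			end
		end
	end
	return results
end
```

Since GetDescendants walks the whole tree, caching the emitter list (and refreshing it when instances are added) is likely cheaper than calling this every frame.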