Oh yeah, one more question: does :ConnectParallel() reuse Lua threads the way :Connect() does when the handler doesn't call wait()? And what if we call synchronize()?

Right now it doesn’t. We may introduce a small thread pool there later.

It seems odd to me that the debugger doesn't work at all in Actor-parented scripts in this developer build. I understand it not working in parallel or desynchronized units, but shouldn't it still function in normal code?

My best guess is that it's disabled outright because there's no way to know ahead of time which code will and won't be multithreaded, and as far as I know the debugger actually modifies the bytecode, so it's an either/or situation.

It would certainly be less work to make it work in non-parallel code; it just requires attaching the debugger to all actor-specific VMs, and we haven't quite done that yet either.

Hi, I have a little question that might seem out of place: since you're currently looking into raytracing with 2D grid canvases composed of ScreenGui Frames, wouldn't it be more efficient to have a new instance, something like a UVCanvas, to render on? UIGradients already allow partial pixel/canvas editing, so couldn't that functionality be taken and turned into its own instance? This would open the door to raytracing and even shader engine creation on a much larger scale.

Edit: In case you're worried about the moderation aspect: builds made in-game can't be moderated until they're reported, and games like Free Draw already let you draw on a canvas without going through Roblox's image moderation system. Alternatively, you could introduce it to a handful of developers first, just like with the video feature.

They sort of answered this question in earlier posts: they can't add something like this because it would let users bypass the image moderation system. Making that API harder to understand might reduce the chance of that happening, though, and I hope that's enough for them to allow it.

So I've been doing some interesting testing with a raytracer program I wrote. It's really simple: it just casts rays outward, gets the color of whatever object each ray hits, and sets the corresponding frame's color accordingly. For the multithreaded part, it takes the number of threads I want to generate, divides the pixels into that many groups, and has each thread render its group independently. If you'd like to download the place file, here it is:

RaytracemultiThread.rbxl (26.1 KB)

I've set up the program with an IntValue in the workspace that controls the number of individual tasks I'm dispatching (basically, the number of individual threads I'm generating per frame). Something I've found is that with my current system, which dispatches a new set of tasks every single frame, the general trend is that fewer threads means more performance. If I run 100 threads, I get anywhere from 0.5 to 5 FPS less on average than if I run 16, which is the number of threads my CPU is rated for. If I run 4 threads, the difference compared to 16 threads is anywhere from 0 to 0.5 FPS. That may sound small, but keep in mind that my FPS was extremely stable throughout: with the camera held still, it barely wiggled by about ±0.1 in either direction, so the difference is definitely significant. Running 1 thread instead of 4 made no difference in performance, even though with 4 threads each one processes only 2,500 rays instead of a single thread processing all 10,000.
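For readers following the numbers, here is a minimal sketch of the pixel-splitting step described above, written in Python rather than Luau. It assumes the pixels are a flat list of indices and that the worker count comes from a setting like the IntValue; `partition` is a hypothetical helper, not code from the place file. It shows how 10,000 rays split four ways gives 2,500 per thread.

```python
def partition(num_pixels: int, num_workers: int) -> list[range]:
    """Split pixel indices 0..num_pixels-1 into num_workers contiguous groups.

    Group sizes differ by at most one, so no worker gets much more work
    than another. If there are more workers than pixels, some groups are empty.
    """
    if num_workers <= 0:
        raise ValueError("num_workers must be positive")
    base, extra = divmod(num_pixels, num_workers)
    groups = []
    start = 0
    for i in range(num_workers):
        # The first `extra` workers take one leftover pixel each.
        size = base + (1 if i < extra else 0)
        groups.append(range(start, start + size))
        start += size
    return groups


# 10,000 rays split across 4 workers: 2,500 rays each.
print([len(g) for g in partition(10_000, 4)])  # [2500, 2500, 2500, 2500]
```

Contiguous ranges keep each worker's pixels adjacent; an interleaved split (every Nth pixel) is an equally valid choice when some screen regions are more expensive to trace than others.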

Overall, I think this raises an interesting question about the intended usage of this API. Should we be using it the way I demonstrated in my place file, generating a new thread for every set of processes we run, every time we want to run them? Or is it smarter to create threads through some hacky process, like running a while loop inside a ConnectParallel handler, keep them around in the background, and push objects into a compute buffer for those loops to chew through? I would have originally assumed the former, because that's what this API seems designed to support, and the latter is very hacky and makes very little sense to me. On the other hand, in my test cases I can't find any good evidence that the former actually performs better. If anything, it performs worse at higher thread counts, and the difference between using multiple threads and a single thread is vanishingly small, if it exists at all.
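To make the second option concrete, here is a Python sketch (not Roblox API) of the general thread-pool pattern: a fixed set of long-lived workers that block on a shared job queue, the "compute buffer", instead of new threads being created every frame. This is an illustration of the pattern only, not how Roblox's scheduler works; `render_pixel` and `WorkerPool` are hypothetical names.

```python
import queue
import threading


def render_pixel(index: int) -> int:
    # Hypothetical stand-in for casting a ray and computing a color.
    return index * 2


class WorkerPool:
    """Long-lived workers that pull jobs from a shared queue."""

    def __init__(self, num_workers: int) -> None:
        self.jobs: queue.Queue = queue.Queue()
        self.results: dict[int, int] = {}
        self._lock = threading.Lock()
        self._threads = [
            threading.Thread(target=self._run, daemon=True)
            for _ in range(num_workers)
        ]
        for t in self._threads:
            t.start()

    def _run(self) -> None:
        while True:
            index = self.jobs.get()  # blocks until a job is available
            if index is None:  # sentinel value: shut this worker down
                self.jobs.task_done()
                return
            color = render_pixel(index)
            with self._lock:
                self.results[index] = color
            self.jobs.task_done()

    def render_frame(self, num_pixels: int) -> dict[int, int]:
        """Queue one frame's worth of jobs and wait until all are processed."""
        self.results = {}
        for i in range(num_pixels):
            self.jobs.put(i)
        self.jobs.join()  # returns once every queued job has called task_done()
        return self.results

    def shutdown(self) -> None:
        for _ in self._threads:
            self.jobs.put(None)
        for t in self._threads:
            t.join()


pool = WorkerPool(num_workers=4)
frame = pool.render_frame(num_pixels=100)
pool.shutdown()
print(len(frame))  # 100
```

In CPython the GIL stops these threads from executing Python code in parallel, so this shows the structure of a persistent pool, not its speedup. The design trade-off is that thread creation cost is paid once, while every frame still pays for queue synchronization.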

Alright, finally had the time and energy tonight to give this a try.

Here’s the end result!

ParallelTerrain.rbxl (39.1 KB)

It works by managing an object pool of “chunk-generation” actors. Each actor defines a Size and Position for a chunk of terrain to be generated at.
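A rough Python sketch of the object-pool idea, for readers unfamiliar with it. It assumes the pool pre-allocates an initial number of workers and creates a new one when it runs dry (the "number of initial actors allocated" parameter suggests growth, but that is an assumption). `ChunkWorker`, `ChunkPool`, `acquire`, and `release` are hypothetical names, not the place file's actual code.

```python
from dataclasses import dataclass


@dataclass
class ChunkWorker:
    """Stand-in for one 'chunk-generation' actor."""
    worker_id: int
    position: tuple[int, int, int] = (0, 0, 0)
    size: int = 0
    busy: bool = False


class ChunkPool:
    def __init__(self, initial_workers: int) -> None:
        self._free = [ChunkWorker(i) for i in range(initial_workers)]
        self._next_id = initial_workers

    def acquire(self, position: tuple[int, int, int], size: int) -> ChunkWorker:
        # Reuse an idle worker if one exists; otherwise grow the pool.
        if self._free:
            worker = self._free.pop()
        else:
            worker = ChunkWorker(self._next_id)
            self._next_id += 1
        worker.position, worker.size, worker.busy = position, size, True
        return worker

    def release(self, worker: ChunkWorker) -> None:
        # Return the worker to the pool instead of destroying it.
        worker.busy = False
        self._free.append(worker)

    @property
    def free_count(self) -> int:
        return len(self._free)


pool = ChunkPool(initial_workers=2)
a = pool.acquire((0, 0, 0), 64)
b = pool.acquire((64, 0, 0), 64)
c = pool.acquire((128, 0, 0), 64)  # pool is empty, so a new worker is created
pool.release(a)
d = pool.acquire((0, 0, 64), 64)   # reuses the worker released above
print(c.worker_id, d is a)  # 2 True
```

Reusing workers avoids repeatedly creating and destroying instances as the player moves; each reuse only updates the chunk's position and size.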

This is how everything is laid out:

-

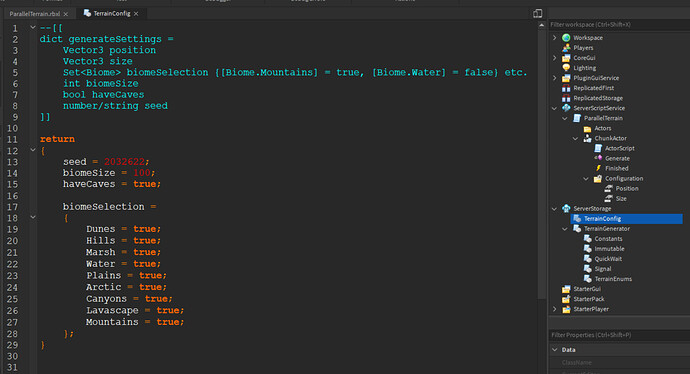

Configuration for the physical Terrain generation is defined in the

TerrainConfigmodule, under theServerStorage:

-

The

ParallelTerrainscript contains parameters for controlling the chunk geneneration and number of initial actors allocated.

Shoutout to the RIDE team for making it extremely easy to convert the existing terrain generator into an infinite terrain chunk generator!

(P.S. This crashes Roblox Studio when the DataModel is closing.)

Raycast minimaps are cool. I've added noise-based texture to the grass and concrete, which looks nice but blurs at higher zoom levels. There seem to be some memory leaks, because it's fast at first but gets very laggy over time.

Edit:

Just to add: there are 5284 pixels across 331 actors. Each actor holds 16 object values, each linking to a different pixel, and for each of those pixels it calculates the raycast and changes the color accordingly. The last actor isn't completely full and manages only 4 pixels.
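The actor count follows from filling actors 16 pixels at a time, with the remainder going to one final, partially filled actor. A small Python check of that arithmetic (`batch_sizes` is a hypothetical helper, not code from the post):

```python
def batch_sizes(num_pixels: int, per_actor: int) -> list[int]:
    """Pixels handled by each actor when actors are filled per_actor at a time."""
    full, remainder = divmod(num_pixels, per_actor)
    # Every full actor gets per_actor pixels; a leftover goes to one extra actor.
    return [per_actor] * full + ([remainder] if remainder else [])


sizes = batch_sizes(5284, 16)
print(len(sizes), sizes[-1])  # 331 4
```

That is, 330 full actors cover 5280 pixels, and the 331st actor handles the remaining 4.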

The most recent version significantly improved core utilization on my 6-core CPU. I was previously getting 65-70% utilization; now I'm getting 80-90%.

Oops, yeah, sorry: this is a regression from the memory leak fixes in V3. There's going to be a V4 after all!

A new version (V4) of the builds were uploaded, the links in the original post were updated.

Changes:

- Fix random crashes on exit / when the game session is stopped

- Add task library to autocomplete and add proper type information

- Unlock Part.GetMass, IsGrounded, GetConnectedParts, GetRootPart, GetJoints for use from parallel code

- Increase microprofile render buffer size to make massive multithreaded profiles a bit easier to look at in Studio

Please note that our build process set the build version to 0.459 instead of 0.460, so don't be alarmed if the latest build reports that version!

This is going to be the last build of the year. Please continue experimenting with the builds and sharing feedback in this thread but be ready for that feedback to be ignored for the next two weeks as we get some needed (and dare I say deserved?) rest.

Hope y’all have a good holiday break and see you in 2021!

I made a system a few years ago that distributes Roblox Studio rendering workloads to local clients in my Studio test server. It gives me a ton more performance.

I rendered this image of a map at 512x512 with 16 ambient-occlusion samples and 16 sun shadows (the sun is the only light source).

Then I re-rendered this using my multi-client system and plotted the data.

I've got a 12-core/24-thread 4.1 GHz Ryzen 9 3900X and 32 GB of 3200 MHz RAM, and I'm only able to utilize half of the cores with multi-threading (green dot on the graph). I also run into some sort of bottleneck when I run multiple clients: after 8 or so cores, the performance gains really start to diminish. I'd like to note that in all of these tests I never used more than 45% CPU.

Learnings:

- Multi-threading doesn't scale as well as I'd hoped. I got a 6x performance gain in this benchmark, but in a lot of other benchmarks it only gave a 4-4.5x improvement.

- Running a ton of Studio clients has diminishing returns because of some sort of bottleneck. Perhaps RAM speed or something?

I'm now tempted to see what happens if I combine multi-threading with multiple clients.

We’ve never profiled this on an AMD system so we may be misconfiguring the scheduler. You also might be running into some other bottleneck, hard to be sure. We’ll look into this next year. A microprofile capture or two would be welcome though.

Wow! I can see the difference between the non-multithreaded version of the voxel terrain generation in BLOX and this version. I'll definitely consider trying out this build of Studio.

Got bored, so I upgraded the rayTracer example to support shadows, fog, light emission, and raytraced reflections.

It's cool that we can run these things in real time now.

Cool stuff.

I made something like that raytracer a little under a month ago.

Right now I'm working on parts in viewport frames; I'm going to see if I can port my code to run in parallel.

Really excited for this to come out into beta; this opens up so many possibilities and lightning-fast processing for multiple operations.

Out of curiosity, has anyone been able to practically use this for anything that isn't contrived or ray-based? I know that @Elttob had to scrap their plans to multithread their terrain generation because of the cost of data transfer (or at least that's what they said on Twitter), and I know that people like @Tomarty aren't going to get much use out of it because of how it interacts with single-script setups… So what are people actually using this for?

I mean heck, I can’t even use this to speed up my plugin without refactoring it entirely because it’s set up to use one script for everything. I’m not usually one to dismiss new features just because I don’t like their design but… Does this actually fulfill anyone’s use case?