Hi @rickje139,

This is a good question. If your "workers" fill the table during one parallel resumption point, all of them are guaranteed to finish before the next resumption point begins, so code running in any later resumption point can safely use the data. I mention this just so anyone reading my reply doesn't assume a more complicated solution is always required.

However, you are asking specifically about running a function that depends on the data in the same parallel step. In that situation you need some way to either signal that the work is complete, or have the function that requires the data do some sort of polling (i.e. "yielding/deferring" until the work is complete). I think the best option is likely to track how much work has been completed and then notify the function once all of it is done.
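To make the trade-off concrete, here is a minimal plain-Lua sketch of the polling option. It is a single-threaded simulation, not Roblox code: coroutines stand in for actors, each pass of the scheduler loop stands in for one resumption point, and `makeWorker`, `consumer`, and the scheduler loop are hypothetical names used only for illustration. Because nothing actually runs in parallel here, a plain table and a plain `+ 1` are enough for the counter.

```lua
-- Plain Lua/Luau simulation (runs outside Roblox). Coroutines stand in for actors,
-- and each pass of the scheduler loop stands in for one resumption point.
local numWorkers = 4
local results = { numResults = 0 }
local consumerChecks = 0 -- how many times the polling consumer woke up
local consumedCount = nil

-- Hypothetical worker: "works" for workIndex steps, then records a result.
local function makeWorker(workIndex)
    return coroutine.create(function()
        for _ = 1, workIndex do
            coroutine.yield() -- pretend the work takes workIndex steps
        end
        results[workIndex] = "result:" .. workIndex * workIndex
        results.numResults = results.numResults + 1
    end)
end

-- Polling consumer: keeps deferring until every worker has finished.
local consumer = coroutine.create(function()
    while true do
        consumerChecks = consumerChecks + 1
        if results.numResults == numWorkers then
            break
        end
        coroutine.yield()
    end
    consumedCount = results.numResults
end)

local tasks = { consumer }
for i = 1, numWorkers do
    table.insert(tasks, makeWorker(i))
end

-- Round-robin scheduler: resume every unfinished coroutine once per step.
repeat
    local anyAlive = false
    for _, co in ipairs(tasks) do
        if coroutine.status(co) ~= "dead" then
            assert(coroutine.resume(co))
            anyAlive = true
        end
    end
until not anyAlive

print(string.format("consumer checked %d times before all %d results were ready",
    consumerChecks, numWorkers))
```

In this simulation the consumer wakes up on every step, and it only notices that the results are ready one step after the last worker finishes. Having the last worker send a notification instead avoids both the repeated checks and that extra delay.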

Here is an example script that takes this track-and-notify approach. It uses Actor messaging so that the function that requires the data runs only after all the results have been written to the table. It also uses `SharedTable.increment` to increment a counter safely from multiple actors.

-- This script assumes it has not been parented to an Actor (it automatically parents clones of itself to Actors)

local numWorkers = 8

local actor = script:GetActor()

if actor then

actor:BindToMessageParallel("DoWork", function(workIndex, resultTable, actorToMessageOnCompletion)

-- Do some work to compute a "result"

-- For this very simple example a string is generated. In theory any data that could be stored in a

-- shared table is possible.

local result = "result:" .. workIndex * workIndex

resultTable[workIndex] = result

-- Increment 'numResults' to track how much work has been completed

local resultCount = SharedTable.increment(resultTable, "numResults", 1) + 1

-- If all work has been completed, then have the last worker signal 'actorToMessageOnCompletion' indicating

-- the work is complete.

if resultCount == numWorkers then

-- Send a message that all work is complete

actorToMessageOnCompletion:SendMessage("ProcessingComplete", resultTable)

end

end)

actor:BindToMessageParallel("ProcessingComplete", function(resultTable)

-- When this callback is called, all of the workers will have completed their work

print("Received table with results from parallel actors")

assert(resultTable.numResults == numWorkers)

print(resultTable)

end)

else

-- This is the codepath for the "main" script. It will perform initialization and create workers

-- Create a shared table with a counter for the amount of work completed initially set to 0.

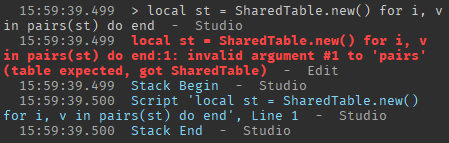

local resultTable = SharedTable.new({numResults = 0})

-- Create child actors to do the work

local workerActors = {}

for i = 1,numWorkers do

local workerActor = Instance.new("Actor")

workerActor.Parent = workspace

local cloneScript = script:Clone()

cloneScript.Parent = workerActor

table.insert(workerActors, workerActor)

end

-- Send a message to all workers so they begin working. We select the first child (arbitrarily) as the one that will

-- be sent a message when all the work is complete. Some other actor, even one that is not a worker, could be used instead.

for index, childActor in workerActors do

childActor:SendMessage("DoWork", index, resultTable, workerActors[1])

end

end

To make it easy to test this for yourself, I put all the code in a single script. But in practice it would probably be cleaner to split this into at least two separate scripts.

Also, although I used Actor messaging to notify the function that all the work is complete, other mechanisms could be used (e.g. a BindableEvent). Please treat this as an example rather than the only possible solution to this problem.
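Whatever notification mechanism you pick, it can help to keep the counting separate from the notifying. Below is a minimal plain-Lua sketch of that idea, not Roblox API code: `makeCompletionCounter`, `markDone`, and `onComplete` are hypothetical names, and a plain local counter stands in for the SharedTable because this simulation is single-threaded. Like `SharedTable.increment` in the script above, the counter works from the value before the increment, so exactly one caller, the last one, sees the total reached.

```lua
-- Plain Lua/Luau sketch (runs outside Roblox). Single-threaded, so a local
-- counter is safe here; across actors, use SharedTable.increment instead.

-- Hypothetical helper: returns a function that each worker calls once when done.
-- `onComplete` decides how completion is reported (in Roblox this could send an
-- Actor message or fire a BindableEvent).
local function makeCompletionCounter(total, onComplete)
    local count = 0
    return function(resultTable)
        -- Keep the value from before the increment, as SharedTable.increment returns it.
        local previous = count
        count = previous + 1
        if previous + 1 == total then
            onComplete(resultTable) -- only the last worker reaches this line
        end
    end
end

local numWorkers = 8
local resultTable = {}
local notifications = 0

local markDone = makeCompletionCounter(numWorkers, function(results)
    notifications = notifications + 1
    print("All " .. #results .. " results are ready")
end)

-- Simulate the workers finishing one after another.
for workIndex = 1, numWorkers do
    resultTable[workIndex] = "result:" .. workIndex * workIndex
    markDone(resultTable)
end
```

In real parallel code the read-and-add must be atomic, which is what `SharedTable.increment` provides. With a non-atomic counter, two workers could read the same previous value, and the completion callback could then fire twice or never.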