Are the chunk generators running in actors, or on the main core? I ask because my generating function uses 60% of a core, so it’s impossible for more than 2 chunks to generate at the same time on the same core.

They are running in actors. I have a shared pool of 24 actors and if at any point all of them are running then the main thread stops producing jobs until one is available. This is the only part of my code that “waits” for anything.

That’s exactly what I’m trying to do. However, it seems that once one job starts, all the other jobs stop and wait for it to finish. I can post all the code for the thread manager here if that would help us debug it.

I’ve tried to debug it further, but it seems like the scripts running inside actors block every other core for some reason. I’ll continue debugging tomorrow.

I’ve tried debugging it further but found no fix. Could this be a bug in Roblox’s multithreading?

Still no fix found. Can anyone help me, or am I doing something fundamentally wrong with Actors?

Could this be the problem?

if x % 5 == 0 then
    task.wait()
end

Does it make the thread jump to serial instantly? How else am I supposed to use task.wait?

You should avoid task.wait in actors

Is there any way to fix this? Adding task.desynchronize() after the task.wait() makes it 200 ms slower, and wait switches to serial Lua the same way task.wait does. Heartbeat may work. How can I prevent my loops from crashing without waits?

You still want task.desynchronize() at the start; try just removing the wait. I have seen other instances of Parallel Luau not stalling under high CPU usage. If it does stall and kill the thread, try splitting your chunks into smaller pieces and distributing them across more actors.
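One way to read the "smaller pieces" suggestion is sketched below. It assumes a chunk can be cut into independent slabs along one axis, and `splitRange` is a hypothetical helper, not part of any Roblox API. Each resulting slice could then be handed to a different actor.

```lua
-- Hypothetical helper: split the inclusive range 1..size into at most
-- `pieces` contiguous slices of near-equal length.
local function splitRange(size: number, pieces: number): {{number}}
	local slices = {}
	local base = math.floor(size / pieces) -- minimum slice length
	local extra = size % pieces            -- the first `extra` slices get one more
	local first = 1
	for i = 1, pieces do
		local length = base
		if i <= extra then
			length += 1
		end
		if length > 0 then
			table.insert(slices, {first, first + length - 1})
			first += length
		end
	end
	return slices
end

-- A 16-wide chunk split for 4 actors gives the slices 1-4, 5-8, 9-12, 13-16.
for _, slice in ipairs(splitRange(16, 4)) do
	print(slice[1], slice[2])
end
```

Smaller slices only help if the slices really are independent; any neighbor lookups across a slice boundary would need extra handling that this sketch leaves out.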

I’ve changed the size of chunks to 6x320x6, but the client still seems to have a ping of 4000 while chunks are generating. Does that mean one of the actors is on the main thread?

Also, it now takes 0.06 seconds to generate a chunk and ~0.8 seconds to generate 16 chunks. Shouldn’t it take about 0.1 seconds, since all the chunks generate at the same time? Or am I thinking about it wrong?

Generating this many parts will still cause a high receive rate and thus high ping, because the server has to update the client about each part you modify. If all the chunks are in separate actors, they should run at the same time, provided you have the CPU cores to do so.
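The timing question can be checked with a little arithmetic. The sketch below is an idealized model that ignores scheduling and data-transfer overhead, and `idealWallTime` is a hypothetical name. It estimates the wall time for a batch of equal-cost jobs spread over a number of workers.

```lua
-- Idealized model: jobs run in "rounds" of at most `workers` jobs each.
local function idealWallTime(jobCount: number, secondsPerJob: number, workers: number): number
	local rounds = math.ceil(jobCount / workers)
	return rounds * secondsPerJob
end

print(string.format("%.2f", idealWallTime(16, 0.06, 1)))  -- 0.96 (fully serial)
print(string.format("%.2f", idealWallTime(16, 0.06, 16))) -- 0.06 (fully parallel)
```

Under this model the measured ~0.8 seconds is much closer to the serial figure than to the parallel one, which is consistent with the jobs running one after another.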

I’m not sending the parts to the client; this is just for generating chunk data. Only after all of this generation does it send parts to the client. What I want to optimise is the chunk generation.

Had the same issue, couldn’t find a fix. I have a nice algorithm, but it takes 6 minutes to calculate the geometry, with or without coroutines.

First thing you should do if you haven’t yet is open the microprofiler and see if the tasks are actually being distributed among different threads. It should look something like this where there are multiple layers of processes:

If you don’t see something similar to that, continue reading. Otherwise, it’s probably a problem with parallelization itself, and I’ll still help you with that.

This is a red flag. Parallelization is on a per-actor basis, and using it inside the module itself could be the problem. I’ve used Parallel Luau before, and the way I did it is by putting the entire to-be-parallelized code inside the actor script, and not in a module. This also means that the thread is desynchronized in the script directly and not from a module function.

For comparison, here’s my own multithreaded terrain generator that has a similar framework to yours:

And at the bottom is a snippet of the actor scripts in my project:


...

instructEvent.Event:ConnectParallel(function(instruction: taskInstruction)
    print(`Processor {id} received a new task`)
    local encodeds: {string} = {}

    for k, corner: Vector3 in instruction.corners do
        local voxelDim: number = wCFG.lodVoxelDims[k]
        local chunkDim: number = wCFG.lodChunkDims[k]
        local scalarField: Tensor<boolean> = Tensor.new() --these are random names lol
        local surfaceMap: Tensor<boolean> = Tensor.new()

        local function getVoxel(x: number, y: number, z: number): boolean
            local filled: boolean? = scalarField:get(x, y, z)
            if filled == nil then --if the voxel doesn't exist, make one and store it
                filled = perlin.noiseBinary(corner.X+x*voxelDim, corner.Y+y*voxelDim, corner.Z+z*voxelDim)
                scalarField:set(x, y, z, filled::boolean)
            end
            return filled::boolean
        end

        local function isSurfaceVoxel(x: number, y: number, z: number): boolean --just check to see if the voxel is next to air (nothing)
            if not getVoxel(x+1, y, z) then return true end
            if not getVoxel(x-1, y, z) then return true end
            if not getVoxel(x, y+1, z) then return true end
            if not getVoxel(x, y-1, z) then return true end
            if not getVoxel(x, y, z+1) then return true end
            if not getVoxel(x, y, z-1) then return true end
            return false
        end

        for x = 1, chunkDim do
            for y = 1, chunkDim do
                for z = 1, chunkDim do
                    if getVoxel(x, y, z) and isSurfaceVoxel(x, y, z) then
                        surfaceMap:set(x, y, z, true)
                    end
                end
            end
        end

        encodeds[k] = tensorEncoder.encodeBinaryTensor(surfaceMap)
    end

    local _r: taskResult = {
        id = instruction.id,
        encodedTensors = encodeds
    }
    returnEvent:Fire(_r)
end)

...

As you can see, everything is in the actor script, aside from the bare essential modules like the Perlin noise generator and the Tensor data structure I’m using to store voxels.

Next point:

This can also be a problem if it wasn’t already. You’re not supposed to use task.wait in desynchronized threads because, given how the task scheduler works, it resumes the thread in synchronized mode. If your parallelized code depends on task.wait to function, then I’m afraid you have to rewrite it so that it doesn’t.

@ClientCooldown also has a good point; you should minimize changing the synchronization state of the threads as that can be a big bottleneck in your code. Perform all the parallel tasks together at the same time, temporarily store their results, and then resynchronize the thread and do what you need to do in serial.
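The "do the parallel work together, then resynchronize once" advice could look roughly like this. It is a minimal sketch, not the poster's actual code: it assumes a Script parented under an Actor with two sibling BindableEvents named `Receiver` and `Emitter`, and `generate` is a hypothetical stand-in for the real per-voxel work.

```lua
-- Assumed layout: Actor > { this Script, Receiver, Emitter }.
local Receiver = script.Parent.Receiver
local Emitter = script.Parent.Emitter

-- Hypothetical placeholder for the real work; it must not yield (no task.wait).
local function generate(x: number, z: number): number
	return math.noise(x / 10, z / 10)
end

-- ConnectParallel runs the handler in the parallel phase from the start,
-- so no task.desynchronize() call is needed inside it.
Receiver.Event:ConnectParallel(function(jobId: string, size: number)
	local results = table.create(size * size)
	for x = 1, size do
		for z = 1, size do
			results[(x - 1) * size + z] = generate(x, z)
		end
	end

	-- Switch back to serial exactly once, after all heavy work is done.
	task.synchronize()
	Emitter:Fire(jobId, results)
end)
```

The single task.synchronize() at the end keeps state switches to one per job, which is the point being made above.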

I’m running it on the server, so I have a limited microprofiler. From what I remember from debugging a few days ago, there was only one process layer, labeled 12 Scripts (or something like that). I had 12 actors, so I assumed that was it, but I’ll look again tomorrow and tell you.

I’ve fixed this and removed all task.waits from my code.

I’ve added all of the code inside the module script and my Perlin noise module, but it seems to perform the same. Note that each actor had an independent module script, so they were not sharing the same one, but I still decided to try the usual way.

I tried to implement this, but I’m not sure I did it correctly. I’ll send the new code and the server microprofiler tomorrow. Thank you for your help!

Here are some images of the server microprofiler:

Here is an image of these 7 things closer (They are pretty much the same)

Here is some big thing that takes up to 1600 ms frame times, I think this is the problem, since it uses a lot of cpu and it isn’t stacked like your image:

I can also send you the HTML if you need it.

This is the terrain script:

local Emitter = script.Parent.Emitter
local Receiver = script.Parent.Receiver

function PerlinNoise(coords,amplitude,octaves,persistence)
    coords = coords or {}
    octaves = octaves or 1
    persistence = persistence or 0.5
    if #coords > 4 then
        error("The Perlin Noise API doesn't support more than 4 dimensions!")
    else
        if octaves < 1 then
            error("Octaves have to be 1 or higher!")
        else
            local X = coords[1] or 0
            local Y = coords[2] or 0
            local Z = coords[3] or 0
            local W = coords[4] or 0
            amplitude = amplitude or 10
            octaves = octaves-1
            if W == 0 then
                local perlinvalue = (math.noise(X/amplitude,Y/amplitude,Z/amplitude))
                if octaves ~= 0 then
                    for i = 1,octaves do
                        perlinvalue = perlinvalue+(math.noise(X/(amplitude*(persistence^i)),Y/(amplitude*(persistence^i)),Z/(amplitude*(persistence^i)))/(2^i))
                    end
                end
                return perlinvalue
            else
                local AB = math.noise(X/amplitude,Y/amplitude)
                local AC = math.noise(X/amplitude,Z/amplitude)
                local AD = math.noise(X/amplitude,W/amplitude)
                local BC = math.noise(Y/amplitude,Z/amplitude)
                local BD = math.noise(Y/amplitude,W/amplitude)
                local CD = math.noise(Z/amplitude,W/amplitude)
                local BA = math.noise(Y/amplitude,X/amplitude)
                local CA = math.noise(Z/amplitude,X/amplitude)
                local DA = math.noise(W/amplitude,X/amplitude)
                local CB = math.noise(Z/amplitude,Y/amplitude)
                local DB = math.noise(W/amplitude,Y/amplitude)
                local DC = math.noise(W/amplitude,Z/amplitude)
                local ABCD = AB+AC+AD+BC+BD+CD+BA+CA+DA+CB+DB+DC
                local perlinvalue = ABCD/12
                if octaves ~= 0 then
                    for i = 1,octaves do
                        local AB = math.noise(X/(amplitude*(persistence^i)),Y/(amplitude*(persistence^i)))
                        local AC = math.noise(X/(amplitude*(persistence^i)),Z/(amplitude*(persistence^i)))
                        local AD = math.noise(X/(amplitude*(persistence^i)),W/(amplitude*(persistence^i)))
                        local BC = math.noise(Y/(amplitude*(persistence^i)),Z/(amplitude*(persistence^i)))
                        local BD = math.noise(Y/(amplitude*(persistence^i)),W/(amplitude*(persistence^i)))
                        local CD = math.noise(Z/(amplitude*(persistence^i)),W/(amplitude*(persistence^i)))
                        local BA = math.noise(Y/(amplitude*(persistence^i)),X/(amplitude*(persistence^i)))
                        local CA = math.noise(Z/(amplitude*(persistence^i)),X/(amplitude*(persistence^i)))
                        local DA = math.noise(W/(amplitude*(persistence^i)),X/(amplitude*(persistence^i)))
                        local CB = math.noise(Z/(amplitude*(persistence^i)),Y/(amplitude*(persistence^i)))
                        local DB = math.noise(W/(amplitude*(persistence^i)),Y/(amplitude*(persistence^i)))
                        local DC = math.noise(W/(amplitude*(persistence^i)),Z/(amplitude*(persistence^i)))
                        local ABCD = AB+AC+AD+BC+BD+CD+BA+CA+DA+CB+DB+DC
                        perlinvalue = perlinvalue+((ABCD/12)/(2^i))
                    end
                end
                return perlinvalue
            end
        end
    end
end

local availablePositions = {
    Vector3.new(0, 1, 0),
    Vector3.new(1, 0, 0),
    Vector3.new(0, 0, 1),
    Vector3.new(-1, 0, 0),
    Vector3.new(0, 0, -1),
    Vector3.new(0, -1, 0),
}

--task.desynchronize()

local function toRun(chunk, position, extras)
    local chunkSize, seed, scale, amplitude, cave_scale, cave_amplitude = unpack(extras)
    local blocks = table.create(chunkSize.X)
    task.desynchronize()

    for x = 1, chunkSize.X + 1 do
        if not blocks[x] then blocks[x] = table.create(chunkSize.Z) end
        local real_x = position.X * chunkSize.X + x
        for z = 1, chunkSize.Z + 1 do
            if not blocks[x][z] then blocks[x][z] = table.create(chunkSize.Y) end
            local real_z = position.Z * chunkSize.Z + z
            for y = 1, chunkSize.Y + 1 do
                local real_y = position.Y * chunkSize.Y + y - 1
                local cave_density = PerlinNoise({real_x, real_y, real_z, seed}, cave_scale) * cave_amplitude
                local block = {
                    --position = Vector3.new(real_x, real_y, real_z),
                    material = "unknown",
                }
                if real_y > 3 then
                    if cave_density > 25 then
                        block.material = "air"
                    else
                        local density = y + PerlinNoise({real_x, real_y, real_z, seed}, scale, 4) * amplitude -- (amplitude + chunk.splineValue)
                        --if 150 > (density - chunk.splineValue) then
                        --if density < 130 then
                        if density < 135 then
                            block.material = "stone"
                        else
                            --block.light = 0
                            block.material = "air"
                        end
                    end
                else
                    if real_y == 1 then
                        block.material = "barrier"
                    else
                        local barrier_density = (PerlinNoise({real_x, real_y, real_z, seed}, 5) * 100) + real_y
                        if math.abs(barrier_density) > 2 then
                            block.material = "barrier"
                        end
                    end
                end
                blocks[x][z][y] = block
            end
        end
        --[[if x % 5 == 0 then
            task.wait()
            --task.desynchronize()
        end]]
    end

    for x = 1, chunkSize.X + 1 do
        for z = 1, chunkSize.Z + 1 do
            for y = 1, chunkSize.Y + 1 do
                local block = blocks[x][z][y]
                if block.material ~= "air" then
                    --[[local topBlock = blocks[x][z][y + 1]
                    if not topBlock or topBlock.material == "air" then
                        block.visible = true
                    end]]
                else
                    for _, position in ipairs(availablePositions) do
                        local real_x = x + position.X
                        local real_y = y + position.Y
                        local real_z = z + position.Z
                        if blocks[real_x] and blocks[real_x][real_z] then
                            local neighbor = blocks[real_x][real_z][real_y]
                            if neighbor and neighbor.material ~= "air" then
                                neighbor.visible = true
                            end
                        end
                    end
                end
            end
        end
        --[[if x % 5 == 0 then
            task.wait()
            --task.desynchronize()
        end]]
    end

    return blocks
end

Receiver.Event:Connect(function(threadId, name, arguments)
    local result = toRun(unpack(arguments))
    Emitter:Fire(threadId, result)
end)

and this is how I’m creating the actors:

local ThreadManager = {}
local Thread = {}
Thread.__index = Thread

local HttpService = game:GetService("HttpService")
local ThreadsFolder = game:GetService("ServerScriptService"):FindFirstChild("Threads")

if not ThreadsFolder then
    ThreadsFolder = Instance.new("Folder", game:GetService("ServerScriptService"))
    ThreadsFolder.Name = "Threads"
end

function ThreadManager.new(threads)
    threads = threads or 12
    assert(typeof(threads) == "number", "The 'threads' argument must be a number")
    -- >= 1 so that a single thread is accepted, matching the message
    assert(threads >= 1, "You must choose at least 1 thread")

    local threadObject = setmetatable({}, Thread)
    threadObject.lastRun = 0
    threadObject.threadNum = threads
    threadObject.threads = {}

    for i = 1, threads do
        local newThread = script.Thread:Clone()
        newThread.Parent = ThreadsFolder
        table.insert(threadObject.threads, newThread)
    end

    return threadObject
end

function Thread:RunFunction(name, arguments, onFinished)
    assert(typeof(name) == "string", "The 'name' argument must be a string")
    assert(typeof(arguments) == "table", "The 'arguments' argument must be a table")
    assert(typeof(onFinished) == "function" or typeof(onFinished) == "nil", "The 'onFinished' argument must be a function or nil")
    --assert(script.Functions:FindFirstChild(name), "function not found")

    -- round-robin: pick the next actor, wrapping around after the last one
    self.lastRun += 1
    if self.lastRun > self.threadNum then
        self.lastRun = 1
    end

    local threadId = HttpService:GenerateGUID(false)
    local threadUsing = self.threads[self.lastRun]

    local emitterEvent ; emitterEvent = threadUsing.Emitter.Event:Connect(function(receivingId, ...)
        if threadId == receivingId then
            emitterEvent:Disconnect()
            if onFinished then
                onFinished(...)
            end
        end
    end)

    threadUsing.Receiver:Fire(threadId, name, arguments)
end

return ThreadManager

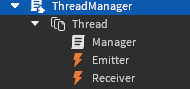

This is the structure of it:

I know it’s a lot to take in, but I don’t really understand how I’m supposed to fix this

Yes it is. It clearly shows the threads all running in serial, one after the other, instead of in parallel where they are all done simultaneously. Well now you know what the exact problem is.

Firstly, have you checked to make sure the tasks are being evenly distributed among the threads? There’s the possibility that a bug made all the tasks only go to one actor and thus it’s still technically running in serial.
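A quick way to test the distribution question outside the microprofiler is to count how many jobs each worker receives. The sketch below models a round-robin picker like the one in the thread manager; `nextWorker` and `jobsPerWorker` are hypothetical names used only for illustration.

```lua
-- Hypothetical round-robin picker with a per-worker job counter.
local workerCount = 4
local jobsPerWorker = table.create(workerCount, 0)
local lastRun = 0

local function nextWorker(): number
	lastRun = lastRun % workerCount + 1 -- 1, 2, 3, 4, 1, 2, ...
	jobsPerWorker[lastRun] += 1
	return lastRun
end

for _ = 1, 16 do
	nextWorker()
end

-- With 16 jobs and 4 workers, every worker should report 4 jobs.
for index, count in ipairs(jobsPerWorker) do
	print(`worker {index}: {count} jobs`)
end
```

If the counts are even but the work still runs back to back, the dispatch is fine and the problem is more likely in how the actor scripts enter the parallel phase.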

You can check for that in the microprofiler too by numbering the threads’ names and seeing which ones are working and which ones aren’t. Name them the way I did here; the actor scripts are called “ChunkThread (number)”:

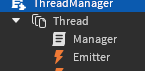
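Besides numbering the script names, custom labels can make each actor's work easier to spot in the microprofiler. This is a small sketch using debug.profilebegin and debug.profileend; the label text and `workerId` are assumptions, and the loop is only a stand-in for real chunk generation.

```lua
local workerId = 1 -- hypothetical: assigned per actor when it is cloned

local function generateChunk(): number
	-- Everything between profilebegin and profileend appears under this label.
	debug.profilebegin(`GenerateChunk {workerId}`)
	local sum = 0
	for i = 1, 1000 do
		sum += math.noise(i / 10) -- stand-in for real generation work
	end
	debug.profileend()
	return sum
end

generateChunk()
```

If the labeled bars for different workers line up one after another on a single row rather than stacking across rows, the work is running serially.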

Secondly, why is there a discrepancy with the names? The actor script’s name is “Manager” in the explorer, but in the microprofiler it’s reported as “Script_Manager”. In reality, the names should perfectly match.

I think this is because the server and client microprofilers are different. There is no script in the game whose name starts with Script_. I even renamed the script from Manager to Manager (id), and now the microprofiler calls it Script_Manager (id), as in this photo:

I believe the problem lies in one of these scripts:

Terrain builder:

for _, chunk in ipairs(newChunks) do
    local start = tick()
    Threads:RunFunction("build_chunks", {chunk, chunk.position, extras}, function(blocks)
        self.chunks[chunk.position.X][chunk.position.Y][chunk.position.Z].blocks = blocks
        finished += 1
        warn(tick() - start)
        if finished == #newChunks then
            onFinished()
        end
    end)
end

Actor manager:

local ThreadManager = {}
local Thread = {}
Thread.__index = Thread

local HttpService = game:GetService("HttpService")
local ThreadsFolder = game:GetService("ServerScriptService"):FindFirstChild("Threads")

if not ThreadsFolder then
    ThreadsFolder = Instance.new("Folder", game:GetService("ServerScriptService"))
    ThreadsFolder.Name = "Threads"
end

function ThreadManager.new(threads)
    threads = threads or 12
    assert(typeof(threads) == "number", "The 'threads' argument must be a number")
    assert(threads >= 1, "You must choose at least 1 thread")

    local threadObject = setmetatable({}, Thread)
    threadObject.lastRun = 0
    threadObject.threadNum = threads
    threadObject.threads = {}

    for i = 1, threads do
        local newThread = script.Thread:Clone()
        -- number each actor script so it can be told apart in the microprofiler
        newThread:FindFirstChild("Manager (id)").Name = `Manager ({i})`
        newThread.Parent = ThreadsFolder
        table.insert(threadObject.threads, newThread)
    end

    return threadObject
end

function Thread:RunFunction(name, arguments, onFinished)
    assert(typeof(name) == "string", "The 'name' argument must be a string")
    assert(typeof(arguments) == "table", "The 'arguments' argument must be a table")
    assert(typeof(onFinished) == "function" or typeof(onFinished) == "nil", "The 'onFinished' argument must be a function or nil")
    --assert(script.Functions:FindFirstChild(name), "function not found")

    -- round-robin: pick the next actor, wrapping around after the last one
    self.lastRun += 1
    if self.lastRun > self.threadNum then
        self.lastRun = 1
    end

    local threadId = HttpService:GenerateGUID(false)
    local threadUsing = self.threads[self.lastRun]

    local emitterEvent ; emitterEvent = threadUsing.Emitter.Event:Connect(function(receivingId, ...)
        if threadId == receivingId then
            emitterEvent:Disconnect()
            if onFinished then
                onFinished(...)
            end
        end
    end)

    threadUsing.Receiver:Fire(threadId, name, arguments)
end

return ThreadManager

It uses all 4 actors (I am testing it with 4 so it won’t crash the server), each of them using 1.2 seconds to generate a chunk.

EDIT:

Do you mind if I enable team create or uncopylock the game so you can look in for yourself?

May I ask what the specs of your computer are? How many cores does your CPU have?

1.2 seconds is the total, and each of them uses 0.3 seconds.

That is fine.

I have an i5-10400 (a 6-core CPU), 16 GB of RAM, and a GTX 970.

Thanks! Here is a link to the game (I uncopylocked it so you can have a look for yourself): Voxel terrain game - Roblox