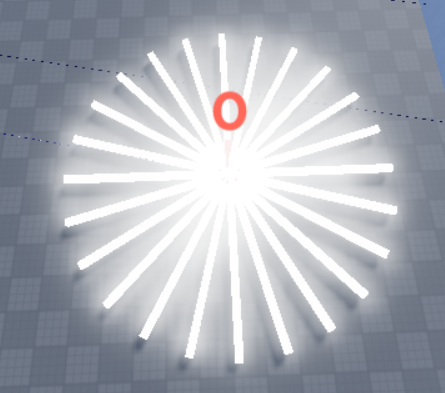

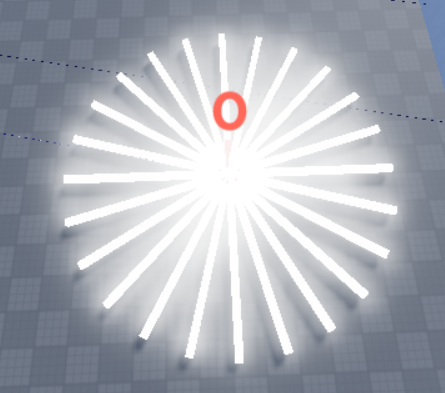

Hi, thanks for the reply. The agents have 15 inputs. The first 12 are short sensor lines that detect things such as bullets or the enemy (see the images above for what I mean).

The last 3 inputs are:

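CanShoot - Tells the AI whether it can shoot: 1 once the 0.25-second cooldown since its last shot has passed, otherwise 0.

DifInX - The difference in “X”, which is really the normalized absolute difference between the two agents' positions along the Z axis.

DifInY - The difference in “Y”, which is again the normalized absolute difference between the two agents' positions, this time along the X axis.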
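To make the last three inputs concrete, here is a minimal sketch of how they are computed, mirroring the script further down. It is plain Lua with positions passed in as numbers; `ExtraInputs` and the constant names are illustrative only, while the scale of 30 and the 0.25-second cooldown are the values my script uses.

```lua
local SHOT_COOLDOWN = 0.25 -- seconds between shots, as in the script
local HALF_RANGE = 30      -- scale used to normalize distances, as in the script

-- Hypothetical helper: returns CanShoot, DifInX, DifInY for one agent.
-- now and lastShot are times in seconds; lastShot is nil before the first shot.
local function ExtraInputs(now, lastShot, selfX, selfZ, enemyX, enemyZ)
    local canShoot = 0
    if lastShot == nil or now - lastShot >= SHOT_COOLDOWN then
        canShoot = 1
    end
    -- "X" is measured along the world Z axis and "Y" along the world X axis.
    local difInX = (math.abs(selfZ - enemyZ) - HALF_RANGE) / HALF_RANGE
    local difInY = (math.abs(selfX - enemyX) - HALF_RANGE) / HALF_RANGE
    return canShoot, difInX, difInY
end
```

With this scaling, a gap of 0 studs maps to -1, 30 studs to 0, and 60 studs to 1.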

> What do your weights, which you’re training, represent in this model?

I’m not sure exactly what you mean by this question. Each agent is controlled by a neural network, so the weights I’m training are just the connection weights and biases of that network. Some clarification would help.
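In case it helps, this is roughly how the weight tables are laid out in my script: each layer is a flat list that stores, for every node, one weight per incoming value followed by that node's bias. Below is a minimal plain-Lua sketch of evaluating one layer under that layout; `EvalLayer` and its parameters are illustrative names, not functions from my script.

```lua
-- Hypothetical helper: evaluates one fully connected layer.
-- flat       : {w1..wN, bias, w1..wN, bias, ...}, one group of N + 1 numbers per node
-- inputs     : list of N numbers
-- activation : optional function applied to each node's weighted sum
local function EvalLayer(flat, inputs, activation)
    local n = #inputs
    local outputs = {}
    for start = 1, #flat, n + 1 do
        local sum = flat[start + n] -- the bias is the last entry of the group
        for i = 1, n do
            sum = sum + inputs[i] * flat[start + i - 1]
        end
        if activation then
            sum = activation(sum)
        end
        outputs[#outputs + 1] = sum
    end
    return outputs
end

local function Relu(x)
    return math.max(0, x)
end

-- Two inputs feeding two nodes:
-- node 1 has weights {1, 2} and bias 0.5, node 2 has weights {-1, -1} and bias 0.
local out = EvalLayer({1, 2, 0.5, -1, -1, 0}, {3, 4}, Relu)
-- out[1] = 3*1 + 4*2 + 0.5 = 11.5, out[2] = Relu(-7) = 0
```

With 15 inputs and 17 hidden nodes, the first table holds 15 * 17 + 17 = 272 numbers.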

Also, I have tried other fitness functions. I tried encouraging the AIs to shoot by giving them a small reward whenever they fired a bullet, but that caused spam-like shooting behavior. My original fitness function was based purely on points scored, i.e. bullets landed on the enemy. My latest idea is to penalize the agents for each movement, to encourage more efficient movement and discourage spinning in circles and spamming bullets. Also, sorry for not providing code earlier. It is fairly long, but here it is:

```lua
-- Pin the agent to a fixed height.
script.Parent.AlignPosition.Position = Vector3.new(script.Parent.Position.X,0.5,script.Parent.Position.Z)

local SS = game:GetService('ServerStorage')
local LastShot = nil
local MovementSpeed = 2.5
local TurnSpeed = 5

local function MoveForward(Amount)
    script.Parent.AlignPosition.Position += Vector3.new(script.Parent.CFrame.LookVector.X,0,script.Parent.CFrame.LookVector.Z) * Amount
end

local function MoveBackward(Amount)
    script.Parent.AlignPosition.Position -= script.Parent.CFrame.LookVector * Amount
end

local function TurnLeft(Amount)
    script.Parent.AlignOrientation.CFrame = CFrame.Angles(0,math.rad(script.Parent.Orientation.Y + Amount),math.rad(-90))
end

local function TurnRight(Amount)
    script.Parent.AlignOrientation.CFrame = CFrame.Angles(0,math.rad(script.Parent.Orientation.Y - Amount),math.rad(-90))
end

local function Shoot()
    LastShot = os.clock()
    local Clone = SS.RedBullet:Clone()
    Clone.CFrame = script.Parent.CFrame + script.Parent.CFrame.LookVector * 4
    Clone.Parent = workspace
    -- Poll the bullet every frame until it is destroyed.
    coroutine.wrap(function()
        --script.Parent.BillboardGui.Score.Text = tostring(script.Parent.BillboardGui.Score.Text + 0.1)
        repeat
            if Clone.Parent == nil or Clone == nil then break end
            local TouchingParts = workspace:GetPartsInPart(Clone,OverlapParams.new())
            for i,v in pairs(TouchingParts) do
                if v.Parent.Name == 'Blue' then
                    -- A hit: Blue loses 3 points, Red gains 3 points.
                    Clone:Destroy()
                    script.Parent.Parent.Parent.Blue.Head.BillboardGui.Score.Text = tostring(script.Parent.Parent.Parent.Blue.Head.BillboardGui.Score.Text - 3)
                    script.Parent.BillboardGui.Score.Text = tostring(script.Parent.BillboardGui.Score.Text + 3)
                end
            end
            task.wait()
        until Clone.Parent == nil or Clone == nil
    end)()
end

-- Builds the input list: one value per sensor part, then CanShoot, DifInX, DifInY.
local function UpdateInputs()
    local RetTable = {}
    for i = 1,#script.Parent.Parent.Inputs:GetChildren() do
        local SelectedPart = script.Parent.Parent.Inputs[tostring(i)]
        local TouchingParts = workspace:GetPartsInPart(SelectedPart,OverlapParams.new())
        -- Only the first overlapping part is inspected (every branch breaks);
        -- if nothing overlaps, no value is appended for this sensor.
        for _,part in pairs(TouchingParts) do
            if part.Name == 'BlueBullet' then
                table.insert(RetTable,-1)
                break
            elseif part.Parent.Name == 'Blue' then
                table.insert(RetTable,-3)
                break
            else
                table.insert(RetTable,0)
                break
            end
        end
    end
    -- CanShoot: 1 once the 0.25 second cooldown has passed.
    if LastShot == nil or os.clock() - LastShot >= 0.25 then
        table.insert(RetTable,1)
    else
        table.insert(RetTable,0)
    end
    local DifInX = math.abs(script.Parent.Position.Z - script.Parent.Parent.Parent.Blue.Head.Position.Z)
    local DifInY = math.abs(script.Parent.Position.X - script.Parent.Parent.Parent.Blue.Head.Position.X)
    -- Map distances from [0, 60] to [-1, 1].
    DifInX = (DifInX - 30) / 30
    DifInY = (DifInY - 30) / 30
    table.insert(RetTable,DifInX)
    table.insert(RetTable,DifInY)
    return RetTable
end

local function Sigmoid(x)
    return 1 / (1 + math.exp(-x))
end

local function Tanh(x)
    return (math.exp(x) - math.exp(-x)) / (math.exp(x) + math.exp(-x))
end

local function Relu(x)
    if x > 0 then
        return x
    else
        return 0
    end
end

local NumberofInputs = 15
local NumberofHiddenNodes = NumberofInputs + 2
local NumberofOutputNodes = 3
local Rand = Random.new()
local RandomStart = true
local Individuals = {}
local Fitnesses = {}
local Weights = {}
local Stop = script.Parent.Parent.Parent.Stop
local Winner = script.Parent.Parent.Parent.Winner

-- REMEMBER TO INCLUDE BIASES, NOT JUST WEIGHTS
-- Each layer is a flat list: for every node, its incoming weights followed by its bias.
local function RandomizeWeightsBiases()
    Weights = {
        ['In-Hidden1'] = {},
        ['Hid1-Hidden2'] = {},
        ['Hid2-Output'] = {}
    }
    for i = 1,NumberofInputs * NumberofHiddenNodes + NumberofHiddenNodes do
        table.insert(Weights['In-Hidden1'],Rand:NextNumber(-3,3))
    end
    for i = 1,NumberofHiddenNodes * NumberofHiddenNodes + NumberofHiddenNodes do
        table.insert(Weights['Hid1-Hidden2'],Rand:NextNumber(-3,3))
    end
    for i = 1,NumberofHiddenNodes * NumberofOutputNodes + NumberofOutputNodes do
        table.insert(Weights['Hid2-Output'],Rand:NextNumber(-3,3))
    end
end

script.Parent.Parent.ChangeWeightsAndBiases.Event:Connect(function(WeightsBiases)
    if not WeightsBiases then
        RandomizeWeightsBiases()
    else
        Weights = WeightsBiases
    end
end)

script.Parent.Parent.RequestWeightsAndBiases.OnInvoke = function()
    return Weights
end

script.Parent.Parent.Parent.StartSimulation.Event:Connect(function(WeightsBiases)
    repeat
        local Inputs = UpdateInputs()

        -- Input layer -> hidden layer 1 (ReLU).
        local InHid1 = Weights['In-Hidden1']
        local Counter = 1
        local HidLay1Vals = {}
        local WS = 0
        for i,v in pairs(InHid1) do
            if Counter < NumberofInputs + 1 then
                WS += Inputs[Counter] * v
            else
                -- v is the bias: finish this node and start the next one.
                WS += v
                Counter = 0
                WS = Relu(WS)
                table.insert(HidLay1Vals,WS)
                WS = 0
            end
            Counter += 1
        end

        -- Hidden layer 1 -> hidden layer 2 (ReLU).
        local Hid1Hid2 = Weights['Hid1-Hidden2']
        Counter = 1
        local HidLay2Vals = {}
        WS = 0
        for i,v in pairs(Hid1Hid2) do
            if Counter < NumberofHiddenNodes + 1 then
                WS += HidLay1Vals[Counter] * v
            else
                WS += v
                Counter = 0
                WS = Relu(WS)
                table.insert(HidLay2Vals,WS)
                WS = 0
            end
            Counter += 1
        end

        -- Hidden layer 2 -> output layer (no activation yet).
        local Hid2Output = Weights['Hid2-Output']
        Counter = 1
        local RawOutputVals = {}
        WS = 0
        for i,v in pairs(Hid2Output) do
            if Counter < NumberofHiddenNodes + 1 then
                WS += HidLay2Vals[Counter] * v
            else
                WS += v
                Counter = 0
                table.insert(RawOutputVals,WS)
                WS = 0
            end
            Counter += 1
        end

        -- Scale the raw outputs down before squashing them.
        for i,v in pairs(RawOutputVals) do
            RawOutputVals[i] = v / 12
        end

        local RefinedOutputVals = {}
        local FinalOutputs = {}
        table.insert(RefinedOutputVals,Tanh(RawOutputVals[1]))
        table.insert(RefinedOutputVals,Tanh(RawOutputVals[2]))
        table.insert(RefinedOutputVals,Sigmoid(RawOutputVals[3]))
        -- Round to discrete actions: move and turn in {-1, 0, 1}, shoot in {0, 1}.
        table.insert(FinalOutputs,math.round(RefinedOutputVals[1]))
        table.insert(FinalOutputs,math.round(RefinedOutputVals[2]))
        table.insert(FinalOutputs,math.round(RefinedOutputVals[3]))

        MoveForward(FinalOutputs[1] * MovementSpeed)
        TurnRight(FinalOutputs[2] * TurnSpeed)
        if FinalOutputs[3] == 1 then
            if LastShot == nil or os.clock() - LastShot >= 0.25 then
                Shoot()
            end
        end
        task.wait()
    until Stop.Value == true
end)
```

(This is the code for the Red AI; the code for the Blue AI is essentially the same, with just the names switched.)
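Coming back to the fitness function described before the code, here is a rough plain-Lua sketch of what I mean by combining hits with a per-movement penalty. The 3 points gained or lost per hit match the score changes in my script; the name `Fitness`, the idea of counting "movement steps", and the penalty of 0.01 per step are placeholder assumptions, not the exact values I use.

```lua
local HIT_REWARD = 3      -- points gained per bullet landed (as in the script)
local HIT_PENALTY = 3     -- points lost per bullet received (as in the script)
local MOVE_PENALTY = 0.01 -- assumed placeholder cost per movement step

-- Hypothetical helper: scores one agent at the end of a round.
local function Fitness(hitsLanded, hitsTaken, movementSteps)
    return hitsLanded * HIT_REWARD
        - hitsTaken * HIT_PENALTY
        - movementSteps * MOVE_PENALTY
end
```

With these placeholder numbers, one landed hit offsets 300 movement steps, so an agent that only spins in circles ends up below one that stands still.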