I think every programmer has thought about “how to make your own programming language” at least once. In this tutorial, you will learn how to do this (although calling it a new programming language is like creating a new musical instrument that can produce only one sound and is based on another instrument.)

- An interpreter is a program that executes instructions written in a programming language without first compiling them into machine code. Instead, the interpreter analyzes and executes the code line by line, which makes it flexible, but usually less productive than compiled programs.

We will use the Roblox Studio environment to demonstrate the basic principles.

We will create an interpreter in Lua, which itself is interpreted by the Roblox runtime environment (written in C++), which is then compiled into machine code. Lol.

1. The logic of the interpreter

The interpreter executes the code following a certain logic, which consists of several main steps. Each of these steps plays an important role in converting the source code into an executable program.

Stage 1: Lexer

At the first stage, the lexer works. The lexer splits the source code of the program into tokens — the minimum significant elements. For example, in the expression 2 + 2 * 2 the lexer will allocate the following tokens:

2 (number), + (operator), 2 (number), * (operator), 2 (number)

Our lexer will present the data in this format:

Tokens = {

[1] = { -- [1] - Its the first element, not the number 1!!!

["type"] = Type,

["value"] = Value

}

}

For example, the number 2 is converted to a token

{

["type"] = "int",

["value"] = 2

}

It is worth clarifying that the asterisk or slash symbol should in no case be considered a multiplication or division sign. The tokenization stage should simply split the source code into tokens, and then determine at the parsing stage whether it is multiplication or dereference.

But I will use just “/”, “*”, “+”, “-”. I’m not just looking around, but I don’t think that naming tokens with these signs is a good practice

The lexer simplifies further processing by providing structured data to the parser.

stage 2: Parser

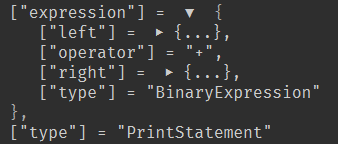

The next step is the parser. The parser accepts a list of tokens issued by the lexer and builds an abstract syntax tree (AST) from them. The AST is a hierarchical structure that reflects the syntactic structure of the program.

For an example of the expression a + b * 3 The AST will look like this:

Step 3: Semantic Analyzer (not used in this tutorial)

The semantic analyzer checks the correctness of the program at the level of meaning. It checks data types, the presence of variables, and other semantic aspects. The implementation of a semantic analyzer is much more complicated than a lexer and a parser, so we omit it in this simplified tutorial. We assume that the source code has already been checked for correctness.

Step 4: Interpreter

At the last stage, the interpreter is working. It passes through the AST and performs the appropriate actions. In our example, it will first calculate b* 3, getting 3b, and then execute a + 3b, returning the final result.

- Thus, each stage — lexer, parser and interpreter — plays a key role in the code processing process. Semantic analysis, although important for full-fledged compilers and interpreters, is omitted in this simplified example for simplicity.

2. Lexer

The lexer is a key component of the interpreter responsible for the lexical analysis of the source code. Its main task is to split the program text into tokens, which represent the minimum significant units of the programming language. This process allows you to prepare the code for subsequent parser processing.

The lexer uses regular expressions (RegExp) to recognize different types of tokens. Regular expressions provide a powerful tool for describing text patterns, which allows the lexer to effectively identify elements such as integers, operators, brackets, and keywords. For example, a lexer can recognize integers using the pattern “%d+”, which corresponds to a sequence of digits.

When creating tokens, the lexer applies a data structure that includes two fields: the token type and its value. This allows the parser to work with well-defined elements, excluding minor characters such as spaces, which are ignored during the tokenization stage. This approach provides a cleaner and more structured processing of the input code.

In addition, the lexer can be easily extended to support new operators or keywords by simply adding the appropriate templates and handlers. This makes it a flexible tool in the development of programming languages and interpreters.

Now let’s look at the implementation of my (too far from the best) lexer in the Luau language:

-- Lexer.luau

local Lexer = {}

Lexer.__index = Lexer

local Token = {}

Token.__index = Token

-- Token constructor

function Token.new(type, value)

return setmetatable({type = type, value = value}, Token)

end

-- Lexer constructor

function Lexer.new()

local lexer = setmetatable({

tokens = {}, -- Array to store generated tokens

patterns = {} -- Array of token patterns

}, Lexer)

-- Add token for integers

lexer:addToken("int", "%d+", function(value) return Token.new("int", tonumber(value)) end)

-- Add tokens for operators

lexer:addToken("operator", "%*", function() return Token.new("operator", "*") end)

lexer:addToken("operator", "%/", function() return Token.new("operator", "/") end)

lexer:addToken("operator", "%+", function() return Token.new("operator", "+") end)

lexer:addToken("operator", "%-", function() return Token.new("operator", "-") end)

-- Add tokens for parentheses

lexer:addToken("operator", "%(", function() return Token.new("operator", "(") end)

lexer:addToken("operator", "%)", function() return Token.new("operator", ")") end)

-- Add token for whitespace (ignored)

lexer:addToken("whitespace", "%s+", function() return nil end)

-- Add token for the 'print' function

lexer:addToken("function", "print", function(value) return Token.new("function", value) end)

return lexer -- Return the created lexer

end

-- Function to add a new token pattern

function Lexer:addToken(type, pattern, handler)

table.insert(self.patterns, {type = type, pattern = pattern, handler = handler})

end

-- Function to tokenize the input string

function Lexer:tokenize(input)

if input == "" then return {} end -- Handle empty input

local position = 1

local length = #input

local tokens = {}

while position <= length do

local matched = false

for _, tokenPattern in ipairs(self.patterns) do

local pattern = tokenPattern.pattern

local match = input:sub(position):match("^(" .. pattern .. ")")

if match then

local token = tokenPattern.handler(match)

if token then

table.insert(tokens, token)

end

position = position + #match

matched = true

break

end

end

if not matched then

error("Unknown character '" .. input:sub(position, position) .. "' at position " .. position)

end

end

return tokens -- Return the array of tokens

end

return Lexer

3: Parser

The parser is the next component of the interpreter, which is responsible for parsing tokens created by the lexer. Its task is to transform a linear list of tokens into a structured representation that will be easier to process for executing program code. The parser analyzes the sequence of tokens, defining syntactic constructions and creating an abstract syntactic tree (AST).

→

→  →

→

(I hope everything is visible)

The parser uses the “look-ahead” method to determine the type of the current token and make an appropriate decision on how to process it. If the token matches the expected type, the parser continues processing; otherwise, an error is generated indicating a mismatch.

-

consume:Checks the current token and moves the position forward if the token matches the expected type. -

ParseExpression, parseTerm, and parseFactor:methods that break expressions into smaller components by processing binary operations and literals.

The parser does its job using different methods to process different types of expressions and operators. It organizes tokens into a hierarchical structure that reflects the syntactic grammar of the language. For example, it can handle arithmetic expressions, operators, and function calls such as print() – this is the only thing I have implemented from the functions in this tutorial

-- Parser.luau

local Parser = {}

Parser.__index = Parser

-- Parser constructor

function Parser.new(tokens)

return setmetatable({ tokens = tokens, position = 1 }, Parser)

end

-- Get the current token

function Parser:currentToken()

return self.tokens[self.position]

end

-- Parse a print statement

function Parser:parsePrintStatement()

local printToken = self:consume("function") -- Consume "function"

if printToken.value ~= "print" then

error("Expected 'print', but got: " .. printToken.value)

end

self:consume("operator") -- Expecting '('

local expression = self:parseExpression()

self:consume("operator") -- Expecting ')'

return { type = "PrintStatement", expression = expression }

end

-- Consume the expected token type

function Parser:consume(expectedType)

local token = self:currentToken()

if token and token.type == expectedType then

self.position = self.position + 1

return token

else

error("Expected token type '" .. expectedType .. "', but got '" .. (token and token.type or "nil") .. "'")

end

end

-- Parse an expression

function Parser:parseExpression()

local left = self:parseTerm()

while self:currentToken() and (self:currentToken().type == "operator" and (self:currentToken().value == "+" or self:currentToken().value == "-")) do

local operator = self:consume("operator")

local right = self:parseTerm()

left = { type = "BinaryExpression", operator = operator.value, left = left, right = right }

end

return left

end

-- Parse a term (handles multiplication and division)

function Parser:parseTerm()

local left = self:parseFactor()

while self:currentToken() and (self:currentToken().type == "operator" and (self:currentToken().value == "*" or self:currentToken().value == "/")) do

local operator = self:consume("operator")

local right = self:parseFactor()

left = { type = "BinaryExpression", operator = operator.value, left = left, right = right }

end

return left

end

-- Parse a factor (handles literals, identifiers, and grouped expressions)

function Parser:parseFactor()

local token = self:currentToken()

if token and token.type == "int" then

local numToken = self:consume("int")

return { type = "Literal", value = numToken.value }

elseif token and token.type == "identifier" then

local idToken = self:consume("identifier")

return { type = "Identifier", name = idToken.value }

elseif token and token.type == "operator" and token.value == "(" then

self:consume("operator")

local expression = self:parseExpression()

self:consume("operator")

return expression

elseif token and token.type == "function" and token.value == "print" then

return self:parsePrintStatement() -- Handling the print function call

end

if token then

error("Unexpected token: " .. token.type)

else

error("Unexpected end of input")

end

end

return Parser

Summary

Updated

local Parser = {}

Parser.__index = Parser

function Parser.new(tokens)

return setmetatable({ tokens = tokens, position = 1 }, Parser)

end

function Parser:currentToken()

return self.tokens[self.position]

end

function Parser:consume(expectedType)

local token = self:currentToken()

if token and token.type == expectedType then

self.position = self.position + 1

return token

else

error("Ожидался токен типа '" .. expectedType .. "', но получен '" .. (token and token.type or "nil") .. "'")

end

end

function Parser:parsePrintStatement()

self:consume("function")

self:consume("operator")

local expression = self:parseExpression()

self:consume("operator")

return { type = "PrintStatement", expression = expression }

end

function Parser:parseExpression()

return self:parseBinaryExpression(1)

end

function Parser:parseBinaryExpression(priority)

local left = self:parsePrimary()

while true do

local token = self:currentToken()

if not token or token.type ~= "operator" then break end

local currentPriority = self:getOperatorPriority(token.value)

if currentPriority < priority then break end

self:consume("operator")

local right = self:parseBinaryExpression(currentPriority + 1)

left = { type = "BinaryExpression", operator = token.value, left = left, right = right }

end

return left

end

function Parser:parsePrimary()

local token = self:currentToken()

if token then

if token.type == "int" then

local numToken = self:consume("int")

return { type = "Literal", value = numToken.value }

elseif token.type == "identifier" then

local idToken = self:consume("identifier")

return { type = "Identifier", name = idToken.value }

elseif token.type == "operator" and token.value == "(" then

self:consume("operator")

local expression = self:parseExpression()

self:consume("operator")

return expression

elseif token.type == "function" and token.value == "print" then

return self:parsePrintStatement()

end

end

error("Неожиданный токен: " .. (token and token.type or "nil"))

end

function Parser:getOperatorPriority(operation)

if operation == "+" or operation == "-" then

return 1

elseif operation == "*" or operation == "/" then

return 2

end

return 0

end

return Parser

3 The Interpreter

- The interpreter is the central component that executes a program written in our language. It takes an abstract syntax tree (AST) created by the parser and performs the appropriate operations based on the structure and values of the tree nodes. The interpreter converts syntactic constructions into semantic actions that can be performed directly.

The main tasks of the interpreter include:

Operator execution: The interpreter handles various types of operators, such as arithmetic operations, assignments, and function calls.

Execution context management: The interpreter also manages the scope of variables and their values, which allows you to perform operations on variables and pass them to functions.

Expression processing: The interpreter analyzes expressions, calculates their values and returns the result.

To execute the program, the interpreter recursively traverses the AST, calling the appropriate functions for each node type. For example, when encountering a node of type PrintStatement, the interpreter will extract the expression, calculate its value and display the result on the screen.

In fact, my implementation is quite trashy. It seems to be more correct to create a node of functions, and already do all the existing Statements inside… Okay, everything is possible within the framework of the manual… (You see, I did the print somehow without thinking. Millet sketched a template)

-- Interpreter.luau

local Evaluator = {}

Evaluator.__index = Evaluator

-- Evaluator constructor

function Evaluator.new()

return setmetatable({}, Evaluator)

end

-- Evaluate the given abstract syntax tree (AST) node

function Evaluator:Evaluate(node)

if node.type == "Literal" then

return node.value -- Return the literal value

elseif node.type == "BinaryExpression" then

local leftValue = self:Evaluate(node.left)

local rightValue = self:Evaluate(node.right)

if node.operator == "+" then

return leftValue + rightValue

elseif node.operator == "-" then

return leftValue - rightValue

elseif node.operator == "*" then

return leftValue * rightValue

elseif node.operator == "/" then

return leftValue / rightValue

else

error("Unknown operator: " .. node.operator) -- Handle unknown operators

end

elseif node.type == "PrintStatement" then

local expression = node.expression

local result = self:Evaluate(expression) -- Corrected spelling from Evaluator to Evaluate

print(result) -- Output the result to the console

else

error("Unknown node type: " .. node.type) -- Handle unknown node types

end

end

return Evaluator

Bonus:

Let’s create a mini Main module that combines all our modules (to make it easier to write code

local LexerModule = require(script.Evaluator.Lexer)

local ParserModule = require(script.Evaluator.Parser)

local EvaluatorModule = require(script.Evaluator)

local Main = {}

Main.__index = Main

-- Main constructor

function Main.new()

local self = setmetatable({}, Main)

self.Evaluator = EvaluatorModule.new()

self.Lexer = LexerModule.new()

self.Parser = nil

return self

end

-- Main interpreter function

function Main:Interpretate(input)

local success, Tokens = pcall(self.Lexer.tokenize, self.Lexer, input) -- Use pcall to handle lexer errors

if not success then

error("Lexer error: " .. Tokens) -- Report lexer errors

return nil

end

self.Parser = ParserModule.new(Tokens)

local success, AST = pcall(self.Parser.parseExpression, self.Parser) -- Use pcall to handle parser errors

if not success then

error("Parser error: " .. AST) -- Report parser errors

return nil

end

local success, result = pcall(self.Evaluator.Evaluate, self.Evaluator, AST) -- Use pcall to handle evaluator errors

if not success then

error("Evaluator error: " .. result) -- Report evaluator errors

return nil

end

return result -- Return the result of the evaluation

end

return Main

So what did we get?

Lets write ServerScript.

local Interpreter = require(script.Parent).new()

Interpreter:Interpretate("print(2 + 5 * 2 - 9)")

5 Results:

In this tutorial, we have reviewed the main components of the interpreter written in the Luau language. We have developed three key components: a lexer, a parser, and an interpreter. Each of these components performs its own unique role in the process of converting source code into an executable program.

The lexer divides the source code into tokens, which represent the minimum significant units of the language.

The parser builds an abstract syntax tree (AST) from tokens, organizing them into a hierarchical structure.

The interpreter performs the operations defined in the AST, returning the results of calculations.

Sorry for my English. I used a translator.