Hi there, is it possible to keep it in a non-attack mode and then, once the player is caught doing something suspicious, have it do as told?

Oh, alright then, thank you for that feedback! I will go and read up more on this topic. The activation functions came from code I took from your sample programs, since they didn’t work on version 1.15. As for the documentation, aside from explaining how deep learning works, a lot is still left unexplained, for example how the objects relate to each other. I had to dig through your code manually to figure out what the OOP structure looked like before I could get my code up and running. I suggest clearing that up further, and also providing good example code that shows how each function or structure should be used, since some functions look like they are meant for one scenario when their intended purpose is another. (E.g. I thought :reinforce() was used with the QLearningNetwork object, when in reality it was only meant to be used in the quick setup version.)

Hopefully I’ll be back once I clear up my misconceptions about deep learning and reinforcement learning.

Well yes, but if it’s something as simple as that, then you might as well use if-statements + Roblox’s pathfinding…

There is more to it; it’s just a question of whether it is compatible, since there will be more.

Oh, alright then. Just note that it will take quite a while to train. I recommend you either create a hard-coded AI that represents the player, or use this library to create learning AIs that represent the player.

Alright, thank you so much. Will try that.

One more thing, do you have any recommended sources for learning deep learning and reinforcement learning? I usually learn best from visual sources found on YouTube. What do you think?

Unfortunately, I don’t have any recommendations that suit your criteria. The majority of my knowledge came from extensive reading of articles and research papers.

But if you really want to understand deep learning from the start, then I’d recommend you look into Andrew Ng’s Deep Learning courses.

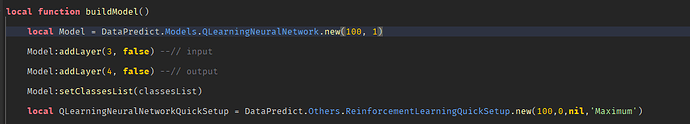

Enable the bias for the first layer.

Then change QLearningNeuralNetwork to DoubleQLearningNeuralNetwork. Apparently the original version has issues, according to several research papers.

Assuming you were referring to DoubleQLearningNeuralNetworkV2, I switched to that and flipped my first bias to true; however, the results are still the same. Is such a simple task really going to take a long time to train? Or is my RL agent still not working properly?

Yes. It will take quite a bit of time to train.

I did something similar to yours (the combination of numbers part) and pretty much managed to get 70% accuracy.

That being said, accuracy isn’t the best metric for measuring performance in reinforcement learning. We usually use the average reward per time step.
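As a rough illustration of that metric, here is a minimal, library-independent sketch in Python. The `AverageRewardTracker` class and its method names are hypothetical and not part of the library discussed here; the idea is simply to sum the rewards and divide by the number of time steps, optionally over a recent window so that you can see whether the agent is still improving.

```python
from collections import deque


class AverageRewardTracker:
    """Hypothetical helper: tracks average reward per time step."""

    def __init__(self, window_size=100):
        self.total_reward = 0.0
        self.step_count = 0
        # Only the most recent `window_size` rewards are kept here.
        self.recent_rewards = deque(maxlen=window_size)

    def record(self, reward):
        """Call once per time step with the reward the agent received."""
        self.total_reward += reward
        self.step_count += 1
        self.recent_rewards.append(reward)

    def overall_average(self):
        """Average reward per time step since tracking began."""
        if self.step_count == 0:
            return 0.0
        return self.total_reward / self.step_count

    def recent_average(self):
        """Average reward per time step over the recent window."""
        if not self.recent_rewards:
            return 0.0
        return sum(self.recent_rewards) / len(self.recent_rewards)


tracker = AverageRewardTracker(window_size=2)
for reward in [1.0, 0.0, 1.0, 1.0]:
    tracker.record(reward)
```

A recent average that rises above the overall average suggests the agent is still learning; if both flatten out, training has probably plateaued.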

Well then, moving on from my old experiment, what about humanoids? I previously experimented with a genetic algorithm, where I managed to cut the training time by letting the agent spawn and train with multiple NPC humanoids at the same time. Is such an approach, or a similar one, possible with QLearning/PPO?

I have in mind an RL NPC whose goal is to walk towards a point. For example, what if there was a goal node somewhere on the map and an RL agent needed to figure out how to get the NPC there? Could I spawn multiple NPCs for the RL agent to train with to shorten the training time, or would that be impossible, or no different from training with one NPC?

Yes, there is, actually. I purposely designed the library to handle multiple agents at once. But I’ll give you the easiest method, which is similar to a genetic algorithm. You have to code it yourself, since there are so many variations of the environment that I would need to cover just to implement the functionality.

Here are the steps:

1. Create multiple agents, each with its own model parameters. Also set up somewhere to track the total reward each agent receives over a given time interval.

2. Run the agents in the environment and let them collect rewards.

3. After each time interval, copy the model parameters of the agent with the highest total reward and load them into the other agents. Then reset the total reward values for all agents.

4. Repeat steps 2 and 3 until you feel the performance is good.
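The steps above can be sketched as follows. This is a toy, library-independent Python example under stated assumptions, not the library’s API: `ToyAgent`, `get_parameters`, `set_parameters`, `act_and_learn` and `train_population` are all hypothetical names, and the "learning" is a simple random hill climb towards a target value that stands in for a real RL update.

```python
import copy
import random


class ToyAgent:
    """Hypothetical stand-in for an RL agent; not the library's API."""

    def __init__(self, rng):
        self.rng = rng
        self.parameters = [rng.uniform(-1.0, 1.0)]
        self.total_reward = 0.0

    def get_parameters(self):
        return copy.deepcopy(self.parameters)

    def set_parameters(self, parameters):
        self.parameters = copy.deepcopy(parameters)

    def act_and_learn(self, target):
        # Toy "learning": try a random nudge, keep it only if it is closer.
        candidate = self.parameters[0] + self.rng.gauss(0.0, 0.1)
        if abs(candidate - target) < abs(self.parameters[0] - target):
            self.parameters[0] = candidate
        reward = -abs(self.parameters[0] - target)
        self.total_reward += reward
        return reward


def train_population(agent_count=4, intervals=20, steps_per_interval=25,
                     target=0.5, seed=0):
    rng = random.Random(seed)
    # Step 1: several agents, each with its own parameters and reward total.
    agents = [ToyAgent(rng) for _ in range(agent_count)]
    for _ in range(intervals):
        # Step 2: let every agent run and collect rewards.
        for _ in range(steps_per_interval):
            for agent in agents:
                agent.act_and_learn(target)
        # Step 3: copy the best agent's parameters into the others,
        # then reset every agent's total reward.
        best = max(agents, key=lambda a: a.total_reward)
        best_parameters = best.get_parameters()
        for agent in agents:
            if agent is not best:
                agent.set_parameters(best_parameters)
            agent.total_reward = 0.0
        # Step 4: the outer loop repeats steps 2 and 3.
    return agents


agents = train_population()
```

In a real setup, each agent would control its own NPC, and the fixed interval count would be replaced by whatever stopping condition suits your environment.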

Just curious, is this the method you use for your sword-fighting AI? I recognise this from when I was browsing through your code.

Also, by different model parameters, how would I generate new ones or swap them out?

Not really. Now that you mention it, I think I should do that.

Anyway, for the model parameters, just call getModelParameters() and setModelParameters(). These functions are only available for models that inherit from the BaseModel class, so you have to look at the API documentation to determine which ones do. Though, if you’re working with the NeuralNetwork directly, I’m sure you can call them.

Also, the model parameters are generated automatically upon training.

Nice, and would it be better if I used PPO instead for this exercise? I read a paper earlier about OpenAI using this algorithm to train their Hide and Seek AI. They said it worked better for them than traditional Q-Learning and that they would make it the default learning algorithm for their future AI-related projects.

Initially, I did try using the PPO implementation from your library, but I got confused after hooking up the Actor and Critic models, since they did not work when I tried training an agent with them.

What are your thoughts?

I recommend Advantage Actor Critic (A2C) over PPO. I really don’t want to go too much into details, but how the mathematics interacts with the real world matters very much. I don’t think the researchers realized that.

PPO might be more sample efficient than A2C, but it has limitations on where you can use it.

For general purpose use, stick with A2C.

Also, if you’re confused about how to use actor-critic methods, you can refer to the sword-fighting AI code in the main post.

I think for starters I’ll stick with DoubleQLearning to familiarise myself with it first before moving on.

I’ll be back once I get it working!