I think I’ve spotted a mistake in your sword-fighting code. I’m not sure if you’ve fixed it yet, but relativeRot is likely to slow down your training, because it compares how closely the directions the two parts are facing match, rather than whether the NPC is facing the target. So if your NPC and the target face perpendicular to each other (90 degrees apart), relativeRot would be 0; but if the NPC faced the target directly while the target was not facing the NPC, relativeRot would not be 0. I’m not sure if this was intentional, but here’s a heads-up.

The same goes for ‘rotationValueNeededToFaceTheEnemy’.

Here is a corrected version of your script to calculate the RotationError:

```lua
-- Function to calculate the angle (in degrees) between two vectors
local function calculateAngle(vector1, vector2)
	local magnitudeProduct = vector1.Magnitude * vector2.Magnitude
	if magnitudeProduct == 0 then
		return 0 -- A zero-length vector has no direction to compare
	end

	-- Clamp so floating-point error can't push the cosine outside [-1, 1] (math.acos would return NaN)
	local cosineOfAngle = math.clamp(vector1:Dot(vector2) / magnitudeProduct, -1, 1)

	return math.deg(math.acos(cosineOfAngle)) -- Convert from radians to degrees
end

-- Function to calculate the signed rotation the NPC needs to face the target part
local function getTurnAngle(npcPart, targetPart)
	local npcPosition = npcPart.Position
	local targetPosition = targetPart.Position

	-- Direction vector from the NPC to the target part
	local directionToTarget = (targetPosition - npcPosition).Unit

	-- The NPC's current forward direction (LookVector points out of the part's front face)
	local npcForward = npcPart.CFrame.LookVector

	-- Unsigned angle between the NPC's forward direction and the direction to the target
	local angle = calculateAngle(npcForward, directionToTarget)

	-- Use the cross product to determine whether the target is to the left or right of the NPC
	local crossProduct = npcForward:Cross(directionToTarget)

	if crossProduct.Y < 0 then
		angle = -angle -- A negative Y component means the target is to the right
	end

	return angle
end
```

The comments in the script explain each step, in case anyone wants to understand it.
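
To make the left/right sign convention easy to check outside Studio, here is a minimal plain-Lua sketch of the same signed-angle idea. It assumes vectors are plain `{x, y, z}` tables rather than Roblox Vector3 values, and Roblox's conventions (+Y is up, an unrotated part looks along -Z, and a positive rotation about Y turns left). Unlike the script above, it also drops the vertical component, on the assumption that you only want the NPC's turning (yaw) angle; `flatten` and `signedYawDegrees` are hypothetical names.

```lua
-- Sketch only: plain {x, y, z} tables stand in for Roblox Vector3 values.
-- Assumes +Y is up and that a positive result means "turn left".

-- Drop the vertical component and normalise, so height differences don't affect the turn angle
local function flatten(v)
	local len = math.sqrt(v.x * v.x + v.z * v.z)
	if len == 0 then
		return nil -- No horizontal direction
	end
	return { x = v.x / len, y = 0, z = v.z / len }
end

local function signedYawDegrees(npcPosition, npcForward, targetPosition)
	local forward = flatten(npcForward)
	local toTarget = flatten({
		x = targetPosition.x - npcPosition.x,
		y = 0,
		z = targetPosition.z - npcPosition.z,
	})
	if forward == nil or toTarget == nil then
		return 0 -- Degenerate case: nothing to compare
	end

	-- Clamp the dot product so floating-point error can't push it outside [-1, 1]
	local dot = forward.x * toTarget.x + forward.z * toTarget.z
	dot = math.max(-1, math.min(1, dot))
	local angle = math.deg(math.acos(dot))

	-- Y component of (forward x toTarget); negative means the target is on the right
	local crossY = forward.z * toTarget.x - forward.x * toTarget.z
	if crossY < 0 then
		angle = -angle
	end
	return angle
end
```

With the NPC at the origin looking along -Z, a target at (10, 0, 0) gives -90 (turn right) and one at (-10, 0, 0) gives 90 (turn left), the same sign convention the script above produces.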

I let it train for a while with the goal of guiding the NPC to the node on the left. It does get there most of the time, but for some reason it makes a huge detour, heading completely away from the node before coming back to it.

How do I fix it? What’s wrong?

Apologies for the late reply. I’ll have a look at it and make changes to it.

First off, you might want to fix the parameters you pass to .new() for QLearning. I missed an error in the documentation, and you ended up following the wrong information.

For the Goal variable, does it represent only a single “goal” block of yours or both of them?

Sorry for the lack of clarity: the Goal variable is tied only to the part on the right. The part on the left is where the NPC spawns at the start, and where it is teleported back to once it has been penalised too much.

There should be no reason for the NPC to head to any block other than the Goal block.

To be honest, I think it’s because:

- It doesn’t have a “none” action that stops it moving once it reaches the goal, which causes the NPC to “overshoot” and move away from the goal.
- The NPC doesn’t seem to have learned how to correct itself whenever it overshoots, so it ends up making a full detour. Maybe giving it more training time would help?
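
A minimal sketch of what having a “none” action means in practice: epsilon-greedy selection over one Q-value per action, where “None” is an ordinary choice the agent can learn to pick once it is at the goal. `ACTIONS` and `chooseAction` are hypothetical names, not part of the library.

```lua
-- Hypothetical action set: "None" is a normal action, so the agent has a way
-- to stay put at the goal instead of overshooting it.
local ACTIONS = { "MoveForward", "TurnLeft", "TurnRight", "None" }

-- Epsilon-greedy selection over one row of Q-values (one value per action).
-- randomFn is injectable so the behaviour can be tested deterministically.
local function chooseAction(qValues, epsilon, randomFn)
	randomFn = randomFn or math.random
	if randomFn() < epsilon then
		-- Explore: pick a uniformly random action (math.random() is in [0, 1))
		return ACTIONS[math.floor(randomFn() * #ACTIONS) + 1]
	end
	-- Exploit: pick the action with the highest Q-value
	local bestIndex = 1
	for i = 2, #ACTIONS do
		if qValues[i] > qValues[bestIndex] then
			bestIndex = i
		end
	end
	return ACTIONS[bestIndex]
end
```

The agent still has to learn that “None” is worth choosing at the goal, so the reward around the goal matters as much as the action existing.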

Hmm, the NPC does have a ‘None’ action that runs an empty function, and I did let it train for roughly 45 to 60 minutes. I’ll try again for another hour and see what happens.

Also, I’ve updated the model a bit, and I’m currently working on an autosave function that saves the model parameters to a DataStore once the NPC has gone a certain amount of time without being respawned (i.e. without being penalised enough to trigger a respawn). Does the modified model look alright? ^
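
The autosave timing described above could be sketched like this in plain Lua. `newAutosaveTracker` is hypothetical, the current time is passed in (in Roblox you might use `os.clock()`), and `saveFn` stands in for whatever actually writes the parameters; in Roblox that would likely be a DataStore `SetAsync` call wrapped in `pcall`, since DataStore requests can fail.

```lua
-- Hypothetical autosave tracker: saves once the NPC has survived `surviveSeconds`
-- without a respawn, then waits for the next respawn before it can save again.
local function newAutosaveTracker(surviveSeconds, saveFn)
	local tracker = { lastRespawnTime = 0, savedThisLife = false }

	-- Call whenever the NPC is penalised enough to be respawned
	function tracker.onRespawn(now)
		tracker.lastRespawnTime = now
		tracker.savedThisLife = false
	end

	-- Call every training step; returns true if a save was triggered
	function tracker.step(now)
		if not tracker.savedThisLife and now - tracker.lastRespawnTime >= surviveSeconds then
			tracker.savedThisLife = true
			saveFn()
			return true
		end
		return false
	end

	return tracker
end
```

Saving at most once per “life” keeps the number of DataStore writes low, which matters because DataStore requests are rate-limited.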

I read somewhere that using a Softmax activation function at the output layer helps distribute the output values and gives better results. Is that true?
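
For reference, this is what a softmax output does, as a minimal, numerically stable plain-Lua sketch (`softmax` here is a standalone function, not the library’s activation implementation). One hedged caveat: in value-based methods such as Q-learning, the outputs are Q-value estimates rather than probabilities, so a linear output layer is the more common choice there; softmax is more typical when the outputs are meant to be action probabilities.

```lua
-- Softmax: turns a list of raw output values into probabilities that sum to 1.
-- Subtracting the maximum first avoids overflow in math.exp for large inputs;
-- the result is unchanged because softmax is shift-invariant.
local function softmax(values)
	local maxValue = -math.huge
	for _, v in ipairs(values) do
		if v > maxValue then maxValue = v end
	end

	local exps, sum = {}, 0
	for i, v in ipairs(values) do
		exps[i] = math.exp(v - maxValue)
		sum = sum + exps[i]
	end

	for i = 1, #exps do
		exps[i] = exps[i] / sum
	end
	return exps
end
```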

Replace 100 with 1 for the first parameter. If I were you, I’d leave the other two parameters empty, since they will fall back to default values recommended by research papers.

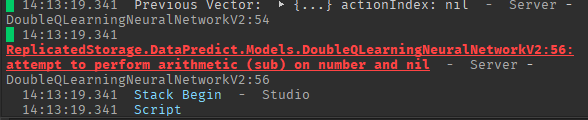

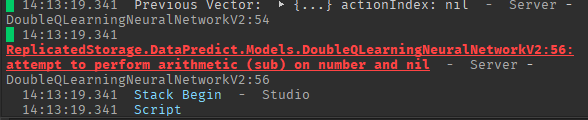

This happens if I don’t keep my values as they are. Is something else causing this?

(The values become NaN or inf.)
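
When values turn into NaN or inf, it can help to check the matrices after each step to see where they first blow up. A minimal sketch of such a check, assuming matrices are stored as tables of rows like `{{ 1, 2, 3 }}`; `findNonFinite` is a hypothetical helper.

```lua
-- Hypothetical debugging helper: returns the row and column of the first
-- NaN or infinite entry in a matrix stored as a table of rows,
-- or nil if every entry is finite.
local function findNonFinite(matrix)
	for row, values in ipairs(matrix) do
		for col, v in ipairs(values) do
			-- NaN is the only value that is not equal to itself
			if v ~= v or v == math.huge or v == -math.huge then
				return row, col
			end
		end
	end
	return nil
end
```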

Oof, I just realized something is wrong with your inputs. You need to do this instead:

```lua
environmentVector = {{ 1, currRotationErrorY, currDistanceFromGoal }}
```

Then set the number of neurons in the first layer to 2; the leading 1 in the vector is the bias input.
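
If you build the input in several places, a small helper keeps the bias column from being forgotten. A minimal sketch; `buildEnvironmentVector` is a hypothetical helper, and it assumes the single-row, table-of-rows layout shown in the reply above.

```lua
-- Hypothetical helper: builds a 1 x (#inputs + 1) environment vector
-- (a table containing one row), with the constant bias input 1 in the first column.
local function buildEnvironmentVector(inputs)
	local row = { 1 } -- Bias term
	for _, value in ipairs(inputs) do
		row[#row + 1] = value
	end
	return { row }
end
```

Usage: `local environmentVector = buildEnvironmentVector({ currRotationErrorY, currDistanceFromGoal })`, which produces the same `{{ 1, currRotationErrorY, currDistanceFromGoal }}` layout.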

Aha! There we go!

Now little Timmy starts behaving properly within a few seconds of training.

All of those 60 minutes were wasted.

There we go. I’m guessing the bias part is the confusing one, isn’t it?

Yep, it sure is. I also have a hard time grasping optimal neural network structures and when to use which activation function. So far, Brilliant’s free trial course has helped a little.

Well, now that I’ve learnt how to train a single agent… what about multiple agents, using a MARL (Multi-Agent Reinforcement Learning) approach? Is this possible with your library, and how would I go about it? For example, a group of AIs that work together to solve a problem (e.g. the hide-and-seek AI that OpenAI developed). I’ve heard that some algorithms are designed specifically for MARL; do you have any in your library?

And is it possible for the trained model to work with a dynamic number of NPCs? If it was originally trained with 2 agents and 2 NPCs, could it work with 5 agents and 5 NPCs?

Yeah, that is possible with MARL. There are different types, though: see “Designing Multi-Agents systems” in the Hugging Face Deep RL Course.

Read the distributed training tutorial in the API documentation; in particular, you need to know about the DistributedGradients part. You’ll have to share the model parameters with the main model’s parameters.
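
To illustrate the idea of many agents contributing to one shared set of parameters, here is a generic gradient-averaging sketch in plain Lua. It is not the library’s DistributedGradients API; `applyAveragedGradients` is hypothetical and assumes parameters and gradients are flat arrays of numbers of the same length.

```lua
-- Generic sketch of parameter sharing (not DataPredict's API): every agent computes
-- a gradient for the same shared parameters, the gradients are averaged, and one
-- gradient-descent step is applied to the main parameters (modified in place).
local function applyAveragedGradients(mainParameters, agentGradients, learningRate)
	local agentCount = #agentGradients
	if agentCount == 0 then
		return mainParameters -- Nothing to apply
	end
	for i = 1, #mainParameters do
		local total = 0
		for _, gradient in ipairs(agentGradients) do
			total = total + gradient[i]
		end
		-- Step against the mean gradient across all agents
		mainParameters[i] = mainParameters[i] - learningRate * (total / agentCount)
	end
	return mainParameters
end
```

Because the update simply loops over however many gradients are reported, the same code runs with 2 or 5 agents; whether a policy trained with 2 agents still behaves well with 5 is a separate question that only experimenting would answer.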

Also look into extendUpdateFunction() and extendEpisodeUpdateFunction() for ReinforcementLearningQuickSetup.

As for your second question, I don’t know, lol. Why not experiment with it first?

Well, I was thinking of using MARL to create an NPC ecosystem: think of a group of soldier NPCs working together to patrol a base and defend it from players. I don’t have much experience designing proper inputs for this sort of AI, though. Do you know any starter inputs I could give them to build a foundation?

Will there be only one base, or multiple bases? If there are multiple bases, they will need a different set of inputs and actions.

Hi guys!

If anyone wants to hire me as a freelance machine learning engineer for your large project, you can do so at Fiverr here:

https://www.fiverr.com/s/W9YopQ

Also, please do share this to your social media! I would greatly appreciate it!
