Around the 25th of June, servers in both of my games started crashing.

Servers seem to crash due to high memory usage, so I originally thought it was a memory leak on my side, which led to further experiments. As part of that, I noticed that the more people are on a server, the more likely it is to crash.

Here are both games where I got reports of servers crashing:

First game: OneSkyVed's Trolleybuses Place (indev) - Roblox

With the first game it's simple: I did not update it in any way around the time servers started crashing, meaning the crashes were not caused by my scripts and started happening on their own. Additionally, I have not received any crash reports for it recently, so maybe it got fixed by itself in the first game.

Second game: Automatic Moscow metro - Roblox

The second game is the one where I did ALL of my experiments, so everything I mention below relates only to the second game; I did not do any experiments in the first one.

Warning: I am not completely sure about any of the information below, because I have no adequate way to test what actually causes the crash.

I suspect this issue might be caused by opportunistic streaming, because that's the only thing my two games have in common.

When I join a big server, I notice two unusual things:

- UntrackedMemory sometimes uses 1-2 GB of memory, but it also drops over time, which suggests it clears itself up when needed(?)

- network/replicator mostly stays around 100-300 MB, but sometimes it skyrockets, and this is when the server crashes. The screenshot below shows it skyrocketing and then dropping back; sometimes it successfully returns to normal values.

The second screenshot below shows what network/replicator looks like when the server crashed:

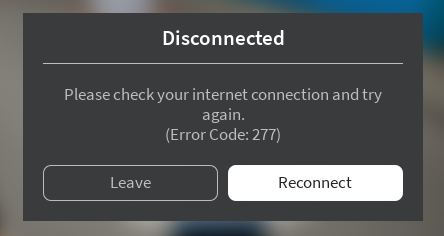

Third screenshot, of the crash message (if you try to join a server that crashed, it kicks you with error code 17):

UPD: after a more detailed look, something first adds up to UntrackedMemory; then, when UntrackedMemory starts going down, network/replicator starts skyrocketing. After that it stops and UntrackedMemory goes up again. Over time it becomes too high, and the next network/replicator spike crashes the server.

UPD2: it seems like the server tries to keep track of the area that each player has loaded before (even if it was unloaded), which seems to create these issues with big player counts. I will try some experiments with reducing the number of instances as a workaround.

UPD3: lowering the number of instances did not fix the network/replicator spikes, but it certainly did leave more memory headroom for them, so there's a smaller chance of the server crashing.

I conducted a bunch of experiments where I completely disabled the scripts that handle server/client communication (anything related to RemoteEvents), and the server crashed anyway.

I have no repro steps or reproduction file, as this issue is caused by something going wrong with network/replicator, which depends on other people spending time in the game. Servers usually crash around 15-60 minutes after launch.

Both games have opportunistic streaming enabled.