chaining three AudioFader instances really isn’t ideal for recreating the old behavior

Since .Volume is multiplicative, if you set AudioPlayer.Volume to 3, you’d only need one AudioFader with .Volume = 3 to boost the asset’s volume 9x
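A minimal sketch of that arithmetic, assuming a script running inside Roblox. The `connect` helper is hypothetical (not an engine function), parenting everything to SoundService is only for convenience, and the asset is left unset on purpose:

```lua
-- Sketch: AudioPlayer -> AudioFader -> AudioDeviceOutput, for 3 x 3 = 9x total gain.
local SoundService = game:GetService("SoundService")

-- Hypothetical helper: create a Wire that carries audio from source to target.
local function connect(source: Instance, target: Instance): Wire
	local wire = Instance.new("Wire")
	wire.SourceInstance = source
	wire.TargetInstance = target
	wire.Parent = target
	return wire
end

local player = Instance.new("AudioPlayer")
player.Volume = 3 -- first 3x multiplier
player.Parent = SoundService
-- Assign an audio asset to the player here, then call player:Play().

local fader = Instance.new("AudioFader")
fader.Volume = 3 -- second 3x multiplier, applied on top of the player's
fader.Parent = SoundService

local output = Instance.new("AudioDeviceOutput")
output.Parent = SoundService

connect(player, fader)
connect(fader, output)
```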

I wonder if it would be more useful to have something like an AudioPlayer.Normalize property. Is the desire for a 10x volume boost coming from quiet source material?

Currently, AudioFader instances cannot serve this use case, as piping multiple AudioPlayer instances through a single AudioFader will stack them all on top of each other

SoundGroups are actually AudioFaders under the hood, so it should be possible to recreate their exact behavior in the new API. The main difference is that Sounds and SoundGroups “hide” the Wires that the audio engine is creating internally, whereas the new API has them out in the open.

When you set the Sound.SoundGroup property, it’s exactly like wiring an AudioPlayer to a (potentially-shared) AudioFader.

I.e. this

is equivalent to
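In script form, the new-API side of that equivalence might look roughly like the sketch below. The `connect` helper and the instance names are made up for illustration, and routing the fader straight to an AudioDeviceOutput is an assumption about where an unparented SoundGroup ends up:

```lua
-- Old API, for comparison: sound.SoundGroup = group
-- New API sketch: two AudioPlayers share one AudioFader, like two Sounds sharing a SoundGroup.
local SoundService = game:GetService("SoundService")

-- Hypothetical helper: create a Wire that carries audio from source to target.
local function connect(source: Instance, target: Instance): Wire
	local wire = Instance.new("Wire")
	wire.SourceInstance = source
	wire.TargetInstance = target
	wire.Parent = target
	return wire
end

local groupFader = Instance.new("AudioFader")
groupFader.Name = "MusicGroup" -- illustrative name
groupFader.Parent = SoundService

local output = Instance.new("AudioDeviceOutput")
output.Parent = SoundService
connect(groupFader, output)

for _, name in { "TrackA", "TrackB" } do
	local player = Instance.new("AudioPlayer")
	player.Name = name
	player.Parent = SoundService
	connect(player, groupFader) -- plays the role of Sound.SoundGroup = group
end
```

Changing `groupFader.Volume` then scales both players at once, which is what adjusting a SoundGroup's volume did for its Sounds.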

Similarly, parenting one SoundGroup to another is exactly like wiring one AudioFader to another.

I.e. this

is equivalent to
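A small sketch of the nested case, again with a hypothetical `connect` helper and made-up names. Since each fader multiplies the signal, the child's effective gain here would be 0.5 x 0.8 = 0.4:

```lua
-- Sketch: a child AudioFader wired into a parent AudioFader,
-- mirroring a SoundGroup parented to another SoundGroup.
local SoundService = game:GetService("SoundService")

-- Hypothetical helper: create a Wire that carries audio from source to target.
local function connect(source: Instance, target: Instance): Wire
	local wire = Instance.new("Wire")
	wire.SourceInstance = source
	wire.TargetInstance = target
	wire.Parent = target
	return wire
end

local parentFader = Instance.new("AudioFader")
parentFader.Name = "Master" -- illustrative name
parentFader.Volume = 0.8
parentFader.Parent = SoundService

local childFader = Instance.new("AudioFader")
childFader.Name = "Ambience" -- illustrative name
childFader.Volume = 0.5
childFader.Parent = SoundService

local output = Instance.new("AudioDeviceOutput")
output.Parent = SoundService

connect(childFader, parentFader) -- plays the role of the SoundGroup parent/child link
connect(parentFader, output)
```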

If there are effects parented to a Sound or a SoundGroup, those get applied in sequence before going to the destination, which is exactly like wiring each effect to the next.
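As a sketch of that chaining, here is one way to wire a player through two effects into a fader. The choice of AudioReverb followed by AudioEcho is just an example, and `connect` is a hypothetical helper:

```lua
-- Sketch: AudioPlayer -> AudioReverb -> AudioEcho -> AudioFader -> AudioDeviceOutput.
local SoundService = game:GetService("SoundService")

-- Hypothetical helper: create a Wire that carries audio from source to target.
local function connect(source: Instance, target: Instance): Wire
	local wire = Instance.new("Wire")
	wire.SourceInstance = source
	wire.TargetInstance = target
	wire.Parent = target
	return wire
end

-- Nodes are listed in the order the signal should pass through them.
local chain = {
	Instance.new("AudioPlayer"),
	Instance.new("AudioReverb"),
	Instance.new("AudioEcho"),
	Instance.new("AudioFader"),
	Instance.new("AudioDeviceOutput"),
}

for _, node in chain do
	node.Parent = SoundService
end

-- Wire each node to the next one in the list.
for i = 1, #chain - 1 do
	connect(chain[i], chain[i + 1])
end
```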

Things get more complicated if a Sound is parented to a Part or an Attachment, since this makes the sound 3d – internally, it’s performing the role of an AudioPlayer, an AudioEmitter, and an AudioListener all in one.

So this

is equivalent to

where the Emitter and the Listener have the same AudioInteractionGroup
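A rough sketch of the 3d case, assuming it runs in a LocalScript so that `workspace.CurrentCamera` exists. The group name `"ExampleSound"` and the `connect` helper are made up for illustration:

```lua
-- Sketch: a 3d Sound split into AudioPlayer -> AudioEmitter (in the world)
-- and AudioListener -> AudioDeviceOutput (at the camera).
local GROUP = "ExampleSound" -- hypothetical interaction group shared by emitter and listener

-- Hypothetical helper: create a Wire that carries audio from source to target.
local function connect(source: Instance, target: Instance): Wire
	local wire = Instance.new("Wire")
	wire.SourceInstance = source
	wire.TargetInstance = target
	wire.Parent = target
	return wire
end

local part = Instance.new("Part")
part.Anchored = true
part.Parent = workspace

local player = Instance.new("AudioPlayer")
player.Parent = part

local emitter = Instance.new("AudioEmitter")
emitter.AudioInteractionGroup = GROUP
emitter.Parent = part -- the emitter's position comes from its parent

local listener = Instance.new("AudioListener")
listener.AudioInteractionGroup = GROUP -- only hears emitters in the same group
listener.Parent = workspace.CurrentCamera

local output = Instance.new("AudioDeviceOutput")
output.Parent = listener

connect(player, emitter)
connect(listener, output)
```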

And if the 3d Sound is being sent to a SoundGroup, then this

is equivalent to

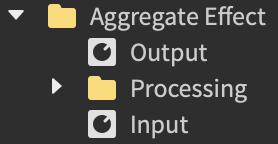

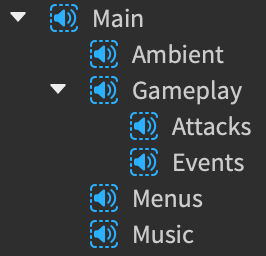

Exposing wires comes with a lot of complexity, but it enables more flexible audio mix graphs, which don’t need to be tree-shaped. I recognize that the spaghetti can get pretty rough to juggle. I used SoundGroups extensively in past projects, and made a plugin with keyboard shortcuts to convert them to the new instances, which is how I’ve been generating the above screenshots. The code isn’t very clean, but I can try to polish it up and post it if that would be helpful!