So, I am attempting to implement self-play with a D3QN and prioritized experience replay to learn sword fighting. I'm aware that neural networks are a bit excessive for this task, but I wanted the practice. I'm stuck on the reward structure and would like recommendations for a reward function that encourages the AI to fight the enemy. Rewards should be bounded in [-1, 1] for stability. This code sums up my current reward structure:

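```lua
local Reward = 0 -- default when nothing changed, so Reward is never nil

if NewHealth ~= OldHealth or NewEnemyHealth ~= OldEnemyHealth then
	-- Relative health change; math.max guards against dividing by zero
	-- (e.g. OldHealth == 0 right after a respawn)
	Reward = (NewHealth - OldHealth) / math.max(OldHealth, 1e-6)
		- (NewEnemyHealth - OldEnemyHealth) / math.max(OldEnemyHealth, 1e-6)
elseif NewDistance < OldDistance then
	-- Small bonus for closing in; distance is floored to avoid 0.1 / 0
	Reward = 0.1 / math.max(NewDistance, 0.1)
elseif NewDistance > OldDistance then
	-- Penalty for backing off, growing with the square of the distance
	Reward = -0.1 * (NewDistance ^ 2 / 2500)
end

-- Keep the per-step reward inside [-1, 1]
Reward = math.clamp(Reward, -1, 1)

local Terminate = false

if Self.Humanoid.Health <= 0 and Enemy.Humanoid.Health > 0 then
	Reward = -1 -- lost the fight
	Terminate = true
elseif Self.Humanoid.Health > 0 and Enemy.Humanoid.Health <= 0 then
	Reward = 1 -- won the fight
	Terminate = true
end
```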
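One possible way to guarantee the [-1, 1] bound is to measure damage as a fraction of max health rather than of the previous health (which can be 0 right after a respawn, making the division blow up), and to replace the two distance branches with potential-based shaping, F = γΦ(s') - Φ(s), a form of shaping known to leave the optimal policy unchanged in the single-agent case. Below is a minimal sketch in plain Lua (also valid Luau). The function name `computeReward`, the `old`/`new` state tables, the 0.1 shaping weight, and `maxDistance = 50` (picked only because 2500 = 50² in the original penalty) are all hypothetical. The terminal -1/+1 win/loss rewards would still override this value on the final step.

```lua
-- Hypothetical helper: per-step reward in [-1, 1] built from health changes
-- (as fractions of max health) plus a small potential-based distance term.
local function clamp(x, lo, hi)
	return math.max(lo, math.min(hi, x))
end

-- old/new are hypothetical tables: { selfHealth = ..., enemyHealth = ..., distance = ... }
local function computeReward(old, new, maxHealth, gamma)
	-- Damage dealt and taken this step, as fractions of max health
	local damageDealt = (old.enemyHealth - new.enemyHealth) / maxHealth
	local damageTaken = (old.selfHealth - new.selfHealth) / maxHealth

	-- Potential phi(s) = -distance / maxDistance (assumed 50 studs, capped),
	-- so moving closer yields a small positive term, backing off a negative one
	local maxDistance = 50
	local phiOld = -math.min(old.distance, maxDistance) / maxDistance
	local phiNew = -math.min(new.distance, maxDistance) / maxDistance
	local shaping = 0.1 * (gamma * phiNew - phiOld)

	return clamp(damageDealt - damageTaken + shaping, -1, 1)
end
```

With this weighting, the shaping term stays much smaller than a typical hit, so most of the signal comes from damage exchanged rather than from distance alone.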

What do you think? Should I make the punishment for losing smaller to encourage more aggressive behavior? There are many ways to approach this. The self-play implementation isn't finished yet: I still have to implement policy snapshotting, policy swapping, and team changing. For now, I just wanted to see how well an agent would learn against a fixed policy after training for a while. The results were not so good:

Red = learning agent, Blue = fixed agent

As you can see, the agents' behavior looks mostly random, and I'm not sure why this keeps happening.
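For the policy snapshotting step that is still on the to-do list, one common pattern is to keep a bounded pool of frozen copies of the learner's weights and to draw opponents from it, usually the newest snapshot but occasionally an older one, so the learner doesn't overfit to a single opponent. Here is a minimal sketch in plain Lua, assuming the weights are stored as nested Lua tables of numbers; `SnapshotPool`, `maxSize`, and `latestProb` are hypothetical names, not part of the original project.

```lua
-- Hypothetical opponent pool for self-play.
local SnapshotPool = {}
SnapshotPool.__index = SnapshotPool

function SnapshotPool.new(maxSize)
	return setmetatable({ snapshots = {}, maxSize = maxSize }, SnapshotPool)
end

-- Recursively copy nested tables so later training doesn't mutate snapshots
local function deepCopy(t)
	local copy = {}
	for k, v in pairs(t) do
		if type(v) == "table" then
			copy[k] = deepCopy(v)
		else
			copy[k] = v
		end
	end
	return copy
end

function SnapshotPool:add(weights)
	table.insert(self.snapshots, deepCopy(weights))
	-- Drop the oldest snapshot once the pool is over capacity
	if #self.snapshots > self.maxSize then
		table.remove(self.snapshots, 1)
	end
end

-- With probability latestProb return the newest snapshot,
-- otherwise a uniformly random one; nil if the pool is empty
function SnapshotPool:sample(latestProb)
	local n = #self.snapshots
	if n == 0 then
		return nil
	end
	if math.random() < latestProb then
		return self.snapshots[n]
	end
	return self.snapshots[math.random(n)]
end
```

For example, you could call `pool:add(weights)` every N training episodes and `pool:sample(0.8)` at the start of each episode to choose the opponent's weights; the 0.8 split is an arbitrary starting point.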

If you like graphs, here are some (I apologize in advance: I was too lazy to modify the graphing module I was using, so they look a bit broken, lol).

Loss Graph:

I have a bug where the loss occasionally spikes to 100k or more, after which my policy (Boltzmann exploration) ends up computing inf / inf and producing NaN. I'm not sure where this originates. The bug is especially annoying because it only happens once in a while, so I'm not 100% sure my fixes have stopped it.

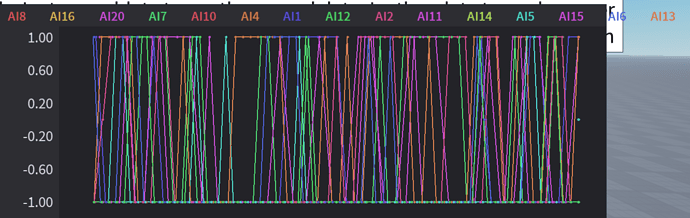
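On the NaN issue: a Boltzmann policy computes exp(Q / T) for each action, and with Q-values in the tens of thousands `math.exp` overflows to `inf`, giving inf / inf = NaN. Subtracting the largest Q-value before exponentiating is the standard fix, since it leaves the probabilities mathematically unchanged while keeping every exponent ≤ 0. A temperature annealed all the way to 0 also produces 0 / 0 = NaN for the best action, so the sketch below floors T. This is a minimal plain-Lua sketch; `boltzmannProbs`, `sampleAction`, and the 1e-6 floor are assumptions. It only protects action selection: if the Q-values themselves are already NaN, the problem is upstream, and the Huber loss or gradient clipping commonly used with DQN are the usual places to look for the loss spikes.

```lua
-- Numerically stable Boltzmann (softmax) action probabilities.
local function boltzmannProbs(qValues, temperature)
	-- Floor the temperature so (q - maxQ) / T never becomes 0 / 0
	temperature = math.max(temperature, 1e-6)

	-- Subtract the max Q-value so the largest exponent is exp(0) = 1,
	-- preventing overflow to inf and the resulting inf / inf = NaN
	local maxQ = -math.huge
	for _, q in ipairs(qValues) do
		if q > maxQ then
			maxQ = q
		end
	end

	local probs, total = {}, 0
	for i, q in ipairs(qValues) do
		local p = math.exp((q - maxQ) / temperature)
		probs[i] = p
		total = total + p
	end
	for i = 1, #probs do
		probs[i] = probs[i] / total
	end
	return probs
end

-- Sample an action index (1-based) from the Boltzmann distribution
local function sampleAction(qValues, temperature)
	local probs = boltzmannProbs(qValues, temperature)
	local r, cumulative = math.random(), 0
	for i, p in ipairs(probs) do
		cumulative = cumulative + p
		if r < cumulative then
			return i
		end
	end
	return #probs -- rounding can leave the final cumulative sum just below r
end
```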

Reward Graph:

As you can see, it's not great: it looks like pure chaos, and there is no way to tell how well the agent is performing. I might switch to tracking win rate or an Elo rating later.
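If you do switch to Elo, the standard update for one game is E_A = 1 / (1 + 10^((R_B - R_A) / 400)) and R_A' = R_A + K(S_A - E_A), where S_A is 1 for a win, 0.5 for a draw, and 0 for a loss. A minimal plain-Lua sketch follows; `expectedScore`, `updateElo`, and the default K = 32 are illustrative choices, not requirements.

```lua
-- Expected score of A against B under the Elo model
local function expectedScore(ratingA, ratingB)
	return 1 / (1 + 10 ^ ((ratingB - ratingA) / 400))
end

-- scoreA: 1 = A won, 0.5 = draw, 0 = A lost; k defaults to 32
local function updateElo(ratingA, ratingB, scoreA, k)
	k = k or 32
	local expectedA = expectedScore(ratingA, ratingB)
	local newA = ratingA + k * (scoreA - expectedA)
	-- B's actual and expected scores are the complements of A's
	local newB = ratingB + k * ((1 - scoreA) - (1 - expectedA))
	return newA, newB
end
```

Against a fixed policy, you could keep the fixed agent's rating constant and only update the learner's; once snapshots exist, each one can carry its own rating. Either way, a rating curve is usually much easier to read than raw per-episode reward.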

If anyone is willing to download the file and look for improvements in general, I'd appreciate it. Here is the file:

wip.rbxl (114.1 KB)