There’s a problem with RegisterAutocompleteCallback and RegisterScriptAnalysisCallback. They are very powerful functions, but they are underutilised because of one core missing feature:

No access to the AST/Lexer.

But what is an AST or a Lexer? A Lexer breaks a script down into tokens (called Lexemes) that are much easier to work with than raw text, and an AST (abstract syntax tree) is built from those Lexemes by arranging them into a tree that reflects the script's structure. (I have no idea how to write an AST.)
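To make the Lexer half of that concrete, here is a toy lexer in plain Luau (it also runs as Lua 5.1). It is a hypothetical illustration, not the downloadable module's code, and it is far simpler than Luau's real lexer: it only recognises names, numbers, single-line quoted strings and single-character symbols. The type names Name, Number, QuotedString and BrokenString are borrowed from Luau's Lexeme types; the Symbol type, the tokenize function and the Text field are made up for this sketch.

```lua
-- Toy lexer (hypothetical, NOT the Lexer module's implementation).
-- Turns source text into a flat array of { Type = ..., Text = ... } tokens.
local function tokenize(source)
	local tokens = {}
	local i = 1
	local n = #source

	while i <= n do
		local c = string.sub(source, i, i)

		if string.match(c, "%s") then
			-- Whitespace separates tokens but produces none
			i = i + 1
		elseif string.match(c, "[%a_]") then
			-- Names (identifiers and keywords): letter/underscore, then word chars
			local s, e = string.find(source, "^[%w_]+", i)
			table.insert(tokens, { Type = "Name", Text = string.sub(source, s, e) })
			i = e + 1
		elseif string.match(c, "%d") then
			-- Numbers: integers and simple decimals only
			local s, e = string.find(source, "^%d+%.?%d*", i)
			table.insert(tokens, { Type = "Number", Text = string.sub(source, s, e) })
			i = e + 1
		elseif c == '"' or c == "'" then
			-- Quoted strings: scan for the matching quote, skipping escapes
			local j = i + 1
			local closed = false
			while j <= n do
				local d = string.sub(source, j, j)
				if d == "\\" then
					j = j + 2 -- skip the backslash and the escaped character
				elseif d == c then
					closed = true
					break
				elseif d == "\n" then
					break -- a newline ends an unterminated string
				else
					j = j + 1
				end
			end

			if closed then
				table.insert(tokens, { Type = "QuotedString", Text = string.sub(source, i, j) })
				i = j + 1
			else
				-- Hit a newline or the end of input before the closing quote
				table.insert(tokens, { Type = "BrokenString", Text = string.sub(source, i, j - 1) })
				i = j
			end
		else
			-- Everything else is a single-character symbol (a real lexer also
			-- recognises multi-character operators such as "==" and "..")
			table.insert(tokens, { Type = "Symbol", Text = c })
			i = i + 1
		end
	end

	return tokens
end
```

Lexing local greeting = "hi" .. name with it yields Name, Name, Symbol, QuotedString, Symbol, Symbol, Name, while an unterminated string like "oops at the end of a line comes out as a BrokenString instead of breaking the rest of the line.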

So why am I complaining about there not being an API for this? Well, I made my own Lexer, forked (for the most part) from the Luau source code.

Usage

You can download the Lexer module here (6.5 KB).

Then require it the same way you would any other module. This module probably doesn’t have much use outside of plugins that work with the script editor, so I’ll use that as the usage example.

Here’s a plugin script that uses an analysis callback to highlight every string (QuotedString and RawString tokens):

local ScriptEditorService = game:GetService("ScriptEditorService")

local Lexer = require(script.Parent.Lexer)

ScriptEditorService:RegisterScriptAnalysisCallback("cat",1,function(req)

local scr = req.script

local tokens = Lexer(ScriptEditorService:GetEditorSource(scr))

local diags = {}

for i, token: Lexer.Token in tokens do

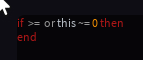

if token.Type == "QuotedString" or token.Type == "RawString" then

local position = token.Position

table.insert(diags, {

range = {

start = {

line = position.start.line,

character = position.start.character + 1

},

["end"] = {

line = position["end"].line,

character = position["end"].character + 1

}

},

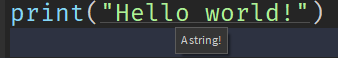

message = "A string!",

severity = Enum.Severity.Information

})

end

end

return {diagnostics = diags}

end)

The callback breaks the script's source down into tokens using the Lexer module, then highlights every string token by reporting an Information diagnostic over its range.
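If you want to highlight more token types, the range-building part of the callback can be pulled out into a helper. This is a hypothetical sketch: diagnosticsForTypes is a name I made up, it assumes each token has the Type and Position fields used in the example (with Position.start and Position["end"] holding line and character), and it keeps the same +1 character offset. The severity is passed in rather than hard-coded so the function itself doesn't touch any Roblox API.

```lua
-- Hypothetical helper: builds one diagnostic per token whose Type is listed
-- in wantedTypes. Assumes the Position shape used in the example above and
-- keeps its +1 character offset. severity is supplied by the caller
-- (e.g. Enum.Severity.Information inside Studio).
local function diagnosticsForTypes(tokens, wantedTypes, message, severity)
	-- Turn the list of wanted types into a set for quick lookups
	local wanted = {}
	for _, tokenType in ipairs(wantedTypes) do
		wanted[tokenType] = true
	end

	local diagnostics = {}
	for _, token in ipairs(tokens) do
		if wanted[token.Type] then
			local position = token.Position
			table.insert(diagnostics, {
				range = {
					start = {
						line = position.start.line,
						character = position.start.character + 1,
					},
					["end"] = {
						line = position["end"].line,
						character = position["end"].character + 1,
					},
				},
				message = message,
				severity = severity,
			})
		end
	end

	return diagnostics
end
```

Inside the analysis callback you could then write return { diagnostics = diagnosticsForTypes(tokens, { "QuotedString", "RawString", "BrokenString" }, "A string!", Enum.Severity.Information) }, assuming the module also emits Luau's BrokenString type for unterminated strings.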

Future Plans

Somewhere down the road I want to have a go at building a full AST on top of this Lexer, but as I said, I have no idea where to start on that, so for now the Lexer will have to do.

Attributions

This entire module is based on the roblox/luau source code.