I’ve been running a bunch of tests on computing square roots, just a little optimization thing I like doing.

And I came across some unusual results.

    local iterations = 1_000_000

    -- Baseline: square root via the math library
    function sqrt(n)
        return math.sqrt(n)
    end

    -- Candidate: square root via the exponent operator
    function fast_sqrt(n)
        return n ^ .5
    end

    local sqrt_time = 0
    local fast_sqrt_time = 0

    warn("== Normal sqrt ==")
    math.randomseed(os.time())
    local start_time = tick()
    local result = 0
    for i = 1, iterations do
        result = sqrt(math.random(1, 1_000_000))
    end
    sqrt_time = tick() - start_time
    warn("sqrt time: \n", sqrt_time)

    warn("== Potentially faster sqrt ==")
    math.randomseed(os.time())
    -- Reuse the locals from the first run instead of redeclaring them
    start_time = tick()
    result = 0
    for i = 1, iterations do
        result = fast_sqrt(math.random(1, 1_000_000))
    end
    fast_sqrt_time = tick() - start_time
    warn("n^0.5 time: \n", fast_sqrt_time)

    if fast_sqrt_time < sqrt_time then
        print("fast_sqrt was faster!")
    else
        print("sqrt was faster!")
    end

I’m well aware that this might not be the best way to test performance, but here are my results. I don’t know whether they differ from processor to processor.

I tested this on an old laptop with a 7th-gen i7 (4 cores) and 16 GB of RAM.

    11:22:55.104 == Normal sqrt ==
    11:22:55.176 sqrt time: 0.07180571556091309
    11:22:55.177 == Potentially faster sqrt ==
    11:22:55.229 n^0.5 time: 0.05223536491394043
    11:22:55.229 fast_sqrt was faster!

Each test runs 1 million iterations, and the random seed is reset before each loop so that the inputs vary enough to hopefully not trigger Luau optimizations such as constant folding.
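For comparison, here is a minimal sketch of a slightly stricter version of the same benchmark. It assumes three changes that are not in the original test: the random inputs are generated once up front so `math.random` is not inside the timed loops, both operations are written inline so there is no wrapper-function call, and `os.clock()` is used for timing instead of `tick()`. The names `ITERATIONS`, `inputs`, `sqrt_sum` and `pow_sum` are just illustrative.

```lua
local ITERATIONS = 1000000

-- Generate the inputs once so both loops see identical data
-- and the cost of math.random is not part of the measurement.
local inputs = {}
for i = 1, ITERATIONS do
    inputs[i] = math.random(1, 1000000)
end

-- Time math.sqrt, accumulating into a sum so the result is actually used.
local sqrt_sum = 0
local t0 = os.clock()
for i = 1, ITERATIONS do
    sqrt_sum = sqrt_sum + math.sqrt(inputs[i])
end
local sqrt_time = os.clock() - t0

-- Time the exponent operator on the same inputs.
local pow_sum = 0
t0 = os.clock()
for i = 1, ITERATIONS do
    pow_sum = pow_sum + inputs[i] ^ 0.5
end
local pow_time = os.clock() - t0

print(string.format("math.sqrt: %.6f s (sum %.3f)", sqrt_time, sqrt_sum))
print(string.format("n ^ 0.5:   %.6f s (sum %.3f)", pow_time, pow_sum))
```

Running it several times and swapping the order of the two loops should give a better idea of whether the gap is real or just noise.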

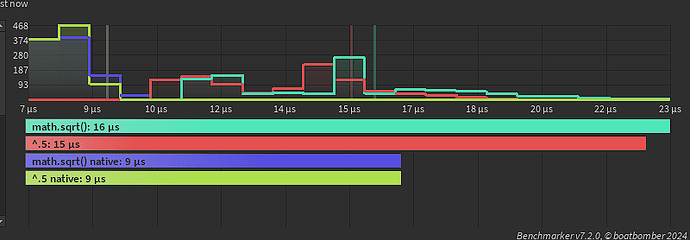

Now, what could cause such a difference in speed?

I should add that math.sqrt(n) is sometimes still faster than n ^ 0.5, but on average n ^ 0.5 seems slightly faster. In the run above the gap was about 0.02 seconds over 1 million calls, so roughly 20 nanoseconds per call. Both give the same results, and I haven’t noticed any loss in accuracy.
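The "same results" observation can be checked directly rather than by eye. This is a small sketch that compares the two expressions over a range of integers and reports the largest relative difference; the range of 1 to 100000 and the name `max_rel_diff` are arbitrary choices for illustration.

```lua
-- Compare math.sqrt(n) and n ^ 0.5 and track the worst relative difference.
local max_rel_diff = 0
for n = 1, 100000 do
    local a = math.sqrt(n)
    local b = n ^ 0.5
    local rel = math.abs(a - b) / a
    if rel > max_rel_diff then
        max_rel_diff = rel
    end
end

print("largest relative difference:", max_rel_diff)
```

A value of 0 means the two agree exactly on every input tested; anything around 1e-16 would be a last-bit rounding difference.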

But could it be even faster?

This I’ve also been wondering.

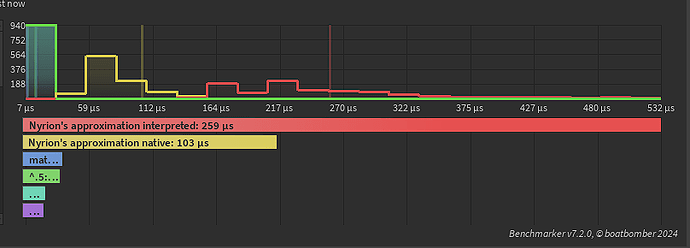

There’s the Fast Inverse Square Root algorithm, which was used in Quake III Arena, I believe.

But that algorithm relies on reinterpreting a float’s bits as an integer and bit-shifting, and it probably wouldn’t be as effective in Luau because it’s interpreted.
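To make that concrete, here is a rough sketch of what the trick could look like in Luau. It assumes `string.pack` / `string.unpack` as the way to reinterpret the bits of a 32-bit float, which is only one possible approach, and the function name `fast_inv_sqrt` is made up for this example. It only handles positive inputs, and the pack/unpack round trips allocate strings, so I would expect it to be slower than `math.sqrt`, not faster. Treat it as an illustration, not a recommendation.

```lua
-- Approximates 1 / sqrt(x) for x > 0 with the classic magic-constant trick.
local function fast_inv_sqrt(x)
    -- Reinterpret the float32 bits of x as an unsigned 32-bit integer.
    local i = string.unpack("<I4", string.pack("<f", x))

    -- Magic constant minus (i >> 1); math.floor(i / 2) stands in for the shift.
    i = 0x5f3759df - math.floor(i / 2)

    -- Reinterpret the integer bits back into a float32 first guess.
    local y = string.unpack("<f", string.pack("<I4", i))

    -- One Newton-Raphson step to refine the guess.
    return y * (1.5 - 0.5 * x * y * y)
end

-- sqrt(x) can be recovered as x * (1 / sqrt(x)).
local x = 4
print("approx 1/sqrt(4):", fast_inv_sqrt(x))
print("approx sqrt(4):  ", x * fast_inv_sqrt(x))
```

With a single refinement step the result is only accurate to a fraction of a percent, so this trades accuracy for speed that it most likely does not deliver here.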

I wouldn’t mind some loss in accuracy in exchange for more performance.

If there’s a way to do even faster square roots in Luau it would be awesome.

Why would I want to do this?

Honestly, finding ways to make code fast and efficient is just one of my hobbies, I guess.

I’m writing a library and I intend to have some module scripts that all contain optimized / faster / easier alternatives to commonly used functions that I will also end up using myself.

Hopefully Roblox brings native Luau to the client soon too, but until then I’ll have to make do with what’s currently possible in Luau and live games.