/!\ - Recently (I don't know exactly when), a limit of 3 threads was imposed on parallel Lua on the client, for unknown reasons (servers need a high number of players to get more threads). As a consequence, the already rare use cases for parallel Lua are even rarer now, and I would actually discourage using parallel Lua unless you benchmark your code to measure the benefit

My goal with this module was to make a Worker module that encourages proper use of parallel Lua while being easy to use and having minimal overhead

This is a 72x128 screen (rendered using 9216 raycasts) that I made using the module

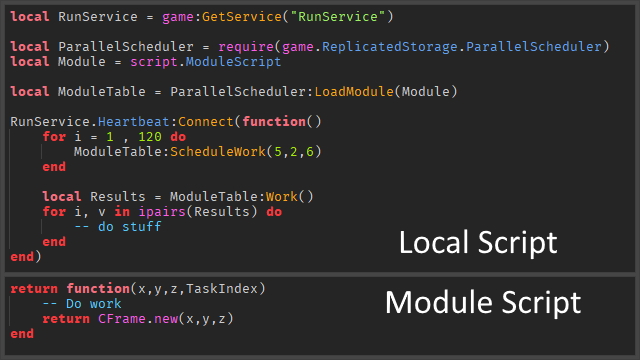

Simple example on how to use it

How to use the module

After requiring the module, use ParallelScheduler:LoadModule(ModuleScript), where ModuleScript is a Module Script that returns a function directly (this is needed to load the function in a different Lua VM)

The module script is cloned and placed into a folder under ParallelScheduler, so using script inside it will NOT refer to where the module script originally was

-- Module Script's structure

return function()

end

The ParallelScheduler:LoadModule() method will return a table containing 5 methods:

ModuleTable:ScheduleWork(…)

This method is used to schedule a task to run when ModuleTable:Work() is called. Arguments passed to this method will be passed to the function from the module script when it runs.

Note: An additional argument, TaskIndex, is passed to the function (as the last argument) when it runs. That argument represents which task the function is running (task #1 is the first call of ModuleTable:ScheduleWork(…), task #2 is the second call, etc)

ModuleTable:Work()

This method runs all the scheduled tasks and yields until all the tasks are done. It will return an array with the results from every task, in the order they were scheduled. If the function returns multiple values (a tuple), they will be packed into a table (with table.pack(…))

ModuleTable:Work() SHOULD NOT be called while a previous ModuleTable:Work() is still waiting for its tasks to finish (it is still allowed, because if the function errors, the previous ModuleTable:Work() will yield forever)
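To make the call pattern above concrete, here is a rough sketch. Since the real module only runs inside Roblox, it uses a small serial stand-in (newSerialStandIn, a hypothetical name, NOT part of the module) that only mimics the documented behaviour: TaskIndex is passed last, results come back in scheduling order, and tuples are packed. Storing a single return value unpacked and clearing the scheduled tasks after Work() are my assumptions.

```lua
-- Serial stand-in (NOT the real module): mimics the documented call pattern only.
local function newSerialStandIn(workFunction)
    local scheduled = {} -- packed argument lists, in scheduling order
    local ModuleTable = {}

    function ModuleTable:ScheduleWork(...)
        scheduled[#scheduled + 1] = table.pack(...)
    end

    function ModuleTable:Work()
        local results = {}
        for taskIndex, args in ipairs(scheduled) do
            -- TaskIndex is appended as the last argument.
            args[args.n + 1] = taskIndex
            local returned = table.pack(workFunction(table.unpack(args, 1, args.n + 1)))
            if returned.n > 1 then
                results[taskIndex] = returned -- tuple: stored packed
            else
                results[taskIndex] = returned[1] -- assumption: single value stored as-is
            end
        end
        scheduled = {} -- assumption: Work() consumes the scheduled tasks
        return results
    end

    return ModuleTable
end

-- With the real module this would be ParallelScheduler:LoadModule(ModuleScript) instead.
local ModuleTable = newSerialStandIn(function(a, b, TaskIndex)
    return math.floor(a / b), a % b, TaskIndex
end)

ModuleTable:ScheduleWork(7, 2) -- task #1
ModuleTable:ScheduleWork(9, 4) -- task #2
local results = ModuleTable:Work()
-- results[1] is the packed tuple from task #1, results[2] the one from task #2
```

The only line that changes with the real module is the one creating ModuleTable; the ScheduleWork/Work calls stay the same.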

ModuleTable:SetMaxWorkers(number)

For performance reasons, the module will assign multiple tasks to the same worker (if MaxWorkers is smaller than the number of tasks)

This method changes how many workers the tasks can use (default: 24 on the client and 48 on the server), and deletes any workers above the new limit

You don’t really need to use this method, unless you want to modify MaxWorkers during runtime

If multiple modules have been loaded and are in use, it could be beneficial to reduce MaxWorkers as there would be more workers overall

ModuleTable:GetStatus()

This returns a table containing 4 values

ScheduledTasks - How many tasks have been scheduled with ModuleTable:ScheduleWork(…)

Workers - How many workers (aka actors) have been created

MaxWorkers - The current worker limit (see ModuleTable:SetMaxWorkers(number))

IsWorking - true if tasks are being run, false otherwise

ModuleTable:Destroy()

Deletes everything. Call this when you don’t need the function anymore

How it works

I have tested many different approaches: using bindable events/bindable functions to send and receive info, the actor messaging API, and Shared Tables

I ended up using bindable events to fire a Work event and a Result event (start work, and work finished) and Shared Tables (after finding out that they exist…) for sharing information.

However, Shared Tables are much slower than normal tables, especially when you have nested Shared Tables (from some testing). So, to improve performance, the number of actors is limited and tasks are merged together so they can run on the same actor. All the parameters for 1 actor are merged into the same table, with the first index being used to tell which parameter belongs to which task.
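The merging described above could look roughly like this. It is only a sketch of the idea using plain tables, not the module's actual code: buildBatches and runBatch are hypothetical names, the round-robin assignment and the exact header layout ({taskIndex, argCount} entries at index 1) are my assumptions, and it assumes no nil arguments.

```lua
-- Split `taskParams` (an array of argument arrays) across at most `maxWorkers` batches.
local function buildBatches(taskParams, maxWorkers)
    local workerCount = math.min(maxWorkers, #taskParams)
    local batches = {}
    for w = 1, workerCount do
        batches[w] = { {} } -- index 1: header, one {taskIndex, argCount} entry per task
    end
    for taskIndex, args in ipairs(taskParams) do
        local batch = batches[(taskIndex - 1) % workerCount + 1] -- round-robin (assumption)
        local header = batch[1]
        header[#header + 1] = { taskIndex, #args }
        for _, value in ipairs(args) do
            batch[#batch + 1] = value -- parameters of all tasks share one flat table
        end
    end
    return batches
end

-- Worker side: walk the header to recover each task's parameters from the flat table.
local function runBatch(batch, workFunction)
    local results = {}
    local cursor = 2 -- parameters start right after the header
    for _, entry in ipairs(batch[1]) do
        local taskIndex, argCount = entry[1], entry[2]
        local args = {}
        for i = 1, argCount do
            args[i] = batch[cursor]
            cursor = cursor + 1
        end
        args[argCount + 1] = taskIndex -- TaskIndex goes last
        results[#results + 1] = { taskIndex, workFunction(table.unpack(args, 1, argCount + 1)) }
    end
    return results
end

-- 3 tasks on 2 workers: worker 1 gets tasks #1 and #3, worker 2 gets task #2
local batches = buildBatches({ { 10, 20 }, { 30 }, { 40, 50, 60 } }, 2)
```

Keeping one flat table per worker avoids nesting a table per task, which matters because nested Shared Tables were the slow part in my testing.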

I set the actor limit to 24 on the client (per loaded module) (a multiple of 2, 4, 6, 8 and 12, so hopefully it works well with most CPUs) and 48 on the server (probably way too high. I have no idea how many cores are assigned to a Roblox server)

For some reason, the micro profiler shows only 8 workers when I have 12 threads

Once the Work event is fired, every actor runs the function with the provided parameters and inserts the results into a table holding the results of all the tasks that worker was assigned; that table is then put into another Shared Table.

A Shared Table is also used to count down the remaining tasks. When it gets to 0, the Result event is fired. The module then puts all the results in a neat little table that is then returned by ModuleTable:Work()
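The countdown and the final reassembly can be sketched like this. Again this is a plain-table simulation and not the module's code: newCollector is a hypothetical name, the onAllDone callback stands in for the Result event, and the {taskIndex, result} pair format is an assumption.

```lua
-- Each worker reports an array of {taskIndex, result} pairs.
local function newCollector(taskCount, onAllDone)
    local remaining = taskCount -- in the module, this counter lives in a Shared Table
    local results = {}
    return function(workerResults)
        for _, pair in ipairs(workerResults) do
            results[pair[1]] = pair[2] -- put each result back at its original task index
            remaining = remaining - 1
        end
        if remaining == 0 then
            onAllDone(results) -- stands in for firing the Result event
        end
    end
end

local finalResults
local report = newCollector(3, function(results)
    finalResults = results
end)

report({ { 3, "c" }, { 1, "a" } }) -- one worker finished tasks #3 and #1
report({ { 2, "b" } }) -- another worker finished task #2, the counter reaches 0 here
```

Because results are indexed by task, the order in which workers finish does not affect the order of the returned array.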

When using ParallelScheduler:LoadModule(ModuleScript), the ModuleScript is cloned and inserted in a folder under the module. It would also work if it required the original module… Hopefully the cloning will become useful for modifying objects when in parallel, by putting them as children of the module script

Hopefully, Shared Tables could get some optimization and that would reduce some of the overhead with the module

It might be possible to optimize it further by merging parameters into 1 big table, though I think that will yield diminishing returns

Performance Tips

- Don’t bother with it if the work you are doing is not that heavy

  For example, I was testing with a script that had 120 tasks creating 11 CFrames with CFrame.lookAt and doing 10 CFrame multiplications, and using the module was about as fast as running it serially

- Reduce the number of tasks, and make the tasks do more work/combined work

  While the module is made to combine tasks to reduce overhead, the overhead is not entirely gone. Having a couple hundred tasks should still be fine, but if you have a lot more than that, combine more work into 1 task

- Send fewer parameters to the function

  With the use of the TaskIndex argument, it is possible to avoid sending arguments. This can reduce the overhead when calling ModuleTable:Work(), and it has a bigger impact when there are a lot of scheduled tasks
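As a sketch of the last two tips combined: the work function below takes no scheduled arguments at all and does a whole row of pixels per task, deriving everything from TaskIndex. The names (renderRow, WIDTH, HEIGHT), the screen orientation and the placeholder per-pixel value are assumptions for illustration; in a real module script the function would be returned directly and the placeholder replaced by the actual work (e.g. a raycast).

```lua
local WIDTH, HEIGHT = 72, 128 -- assumed orientation of the 72x128 screen

-- Called with no scheduled arguments, so TaskIndex is the only argument received.
local function renderRow(TaskIndex)
    local y = TaskIndex -- task #1 handles row 1, task #2 handles row 2, ...
    local row = {}
    for x = 1, WIDTH do
        row[x] = (y - 1) * WIDTH + x -- placeholder for the real per-pixel work
    end
    return row
end

-- Scheduling side (with the real module): call ModuleTable:ScheduleWork() with no
-- arguments HEIGHT times, then ModuleTable:Work() returns one row per task.
```

This gives 128 tasks instead of 9216, and nothing has to be copied to the workers when scheduling.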

I left a lot of profilebegin() and profileend() calls commented out. You can uncomment them to take a look at the performance and the overhead of the module in the micro profiler
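If you want to label your own work function the same way, a minimal sketch looks like this. heavyWork is a hypothetical example function; debug.profilebegin and debug.profileend are the Roblox functions, and the no-op fallbacks are only there so the snippet also runs outside Roblox.

```lua
-- Use the Roblox profiler hooks when available, otherwise fall back to no-ops.
local profilebegin = debug.profilebegin or function() end
local profileend = debug.profileend or function() end

local function heavyWork(n)
    profilebegin("heavyWork") -- label that shows up in the micro profiler
    local sum = 0
    for i = 1, n do
        sum = sum + i * i
    end
    profileend() -- every profilebegin needs a matching profileend
    return sum
end
```

Make sure profileend() is reached on every path through the function, including early returns.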

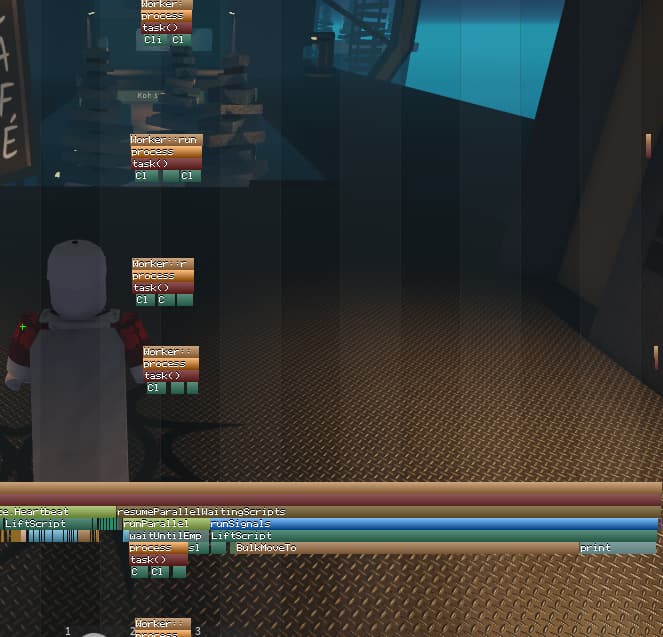

Some nice parallel utilization

Parallel Scheduler Model

Uncopylocked test place

(The test place contains the raycasting script in StarterGui, and the other CFrame test script in StarterPlayerScripts)

If you encounter any bugs, post them as a reply and I will fix them

Hope this module is useful!